DEEP LEARNING OF MICROSTRUCTURES

by

Amir Abbas Kazemzadeh Farizhandi

A dissertation

submitted in partial fulfillment

of the requirements for the degree of

Doctor of Philosophy in Computing, Data Science

Boise State University

December 2022

© 2022

Amir Abbas Kazemzadeh Farizhandi

ALL RIGHTS RESERVED

BOISE STATE UNIVERSITY GRADUATE COLLEGE

DEFENSE COMMITTEE AND FINAL READING APPROVALS

of the dissertation submitted by

Amir Abbas Kazemzadeh Farizhandi

Dissertation Title: Deep Learning of Microstructures

Date of Final Oral Examination: 19 October 2022

The following individuals read and discussed the dissertation submitted by student Amir Abbas Kazemzadeh Farizhandi, and they evaluated their presentation and response to questions during the final oral examination. They found that the student passed the final oral examination.

Mahmood Mamivand, Ph.D. Chair, Supervisory Committee

Edoardo Serra, Ph.D. Member, Supervisory Committee

Eric Jankowski, Ph.D. Member, Supervisory Committee

The final reading approval of the dissertation was granted by Mahmood Mamivand, Ph.D., Chair of the Supervisory Committee. The dissertation was approved by the Graduate College.

DEDICATION

I dedicate my dissertation work to my family. A special feeling of gratitude to my loving wife and parents, whose words of encouragement and push for tenacity ring in my ears.

ACKNOWLEDGMENTS

I would like to express my sincere thanks and appreciation to my supervisor, Dr. Mahmood Mamivand, for his invaluable guidance, support, and suggestions. His knowledge, suggestions, and discussions helped me become a capable researcher, and his encouragement helped me overcome the difficulties I encountered in my research. I would also like to thank Dr. Edoardo Serra and Dr. Eric Jankowski for serving on my committee. I am very grateful to my lovely wife, who always supports me. Last but not least, I want to thank my parents, brother, and sister in Iran for their constant love and encouragement.

ABSTRACT

The internal structure of materials, also called the microstructure, plays a critical role in their properties and performance. Chemical composition is one of the most critical factors that shape the structure of materials. However, chemical composition alone is not decisive: a change in the production process can also significantly alter the materials’ structure. Therefore, many efforts have been made to discover and improve production methods that optimize the functional properties of materials.

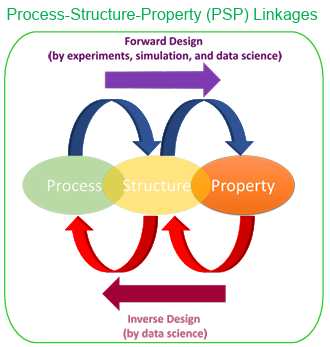

The most critical challenge in finding materials with enhanced properties is to understand and define the salient structural features that have the most significant impact on the desired property. Process-structure-property (PSP) linkages capture how changes in process variables affect the material structure and, consequently, its properties, so they can serve as a powerful tool for designing materials with desirable characteristics. Forward PSP linkage construction, in particular, has received considerable attention thanks to sophisticated physics-based models. Recently, machine learning (ML) and data science have also been used as powerful tools to find PSP linkages in materials science. One key advantage of ML-based models is their ability to construct both forward and inverse PSP linkages. Early ML models in materials science focused primarily on constructing process-property linkages, and microstructures have only recently been included in ML models for materials design. However, the inverse design of microstructures, i.e., the prediction of process and chemistry from an image of microstructure morphology, has received limited attention. This is a critical knowledge gap, specifically for problems in which the ideal microstructure or morphology, together with the chemistry associated with its morphological domains, is known, but the chemistry and processing that would lead to that ideal morphology are unknown.

In this study, first, we propose a framework based on a deep learning approach that enables us to predict the chemistry and processing history just by reading the morphological distribution of one element. As a case study, we used a dataset from

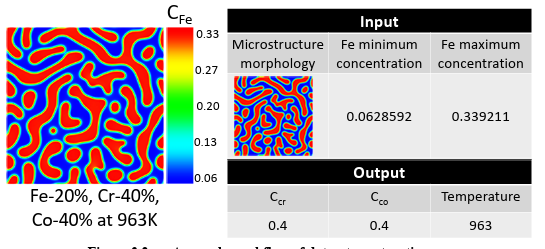

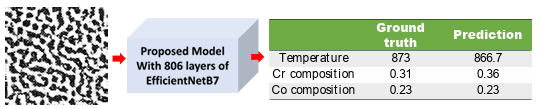

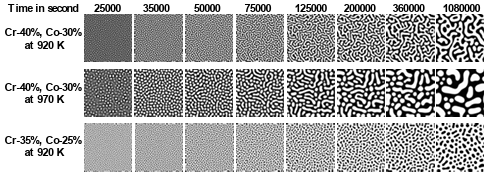

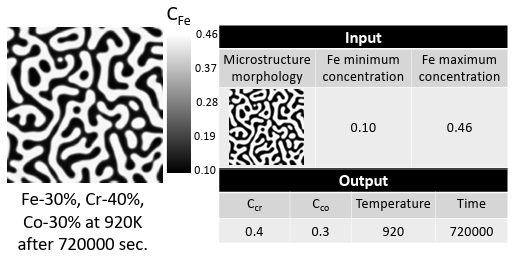

spinodal decomposition simulation of Fe-Cr-Co alloy created by the phase-field method. The mixed dataset, which includes both images, i.e., the morphology of Fe distribution, and continuous data, i.e., the Fe minimum and maximum concentration in the micro structures, are used as input data, and the spinodal temperature and initial chemical composition are utilized as the output data to train the proposed deep neural network. The proposed convolutional layers were compared with pretrained EfficientNet convolutional layers as transfer learning in micro structure feature extraction. The results show that the trained shallow network is effective for chemistry prediction. However, accurate prediction of processing temperature requires more complex feature extraction from the morphology of the micro structure. We bench marked the model predictive accuracy for real alloy systems with a Fe-Cr-Co transmission electron microscopy micro graph. The predicted chemistry and heat treatment temperature were in good agreement with the ground truth. The treatment time was considered to be constant in the first study. In the second work, we propose a fused-data deep learning framework that can predict the heat treatment time as well as temperature and initial chemical compositions by reading the morphology of Fe distribution and its concentration. The results show that the trained deep neural network has the highest accuracy for chemistry and then time and temperature. We identified two scenarios for inaccurate predictions; 1) There are several paths for an identical micro structure, and 2) Micro structures reach steady-state morphologies after a long time of aging. The error analysis shows that most of the wrong predictions are not wrong, but the other right answers. We validated the model successfully with an experimental Fe-Cr-Co transmission electron microscopy micro graph.

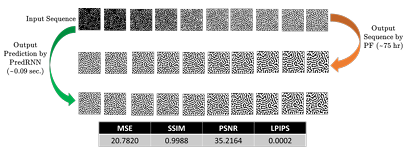

Finally, since generating data by simulation is computationally expensive, we propose a fast and accurate Predictive Recurrent Neural Network (PredRNN) model for predicting microstructure evolution. Microstructure evolution prediction is essentially a spatiotemporal sequence prediction problem, which is made difficult by the variety of process histories and chemistries. As a case study, we used a dataset from phase-field simulations of spinodal decomposition in the Fe-Cr-Co alloy to train the network and to predict future microstructures from previous observations. The results show that the trained network predicts microstructure evolution efficiently.

TABLE OF CONTENTS

DEDICATION

ACKNOWLEDGMENTS

ABSTRACT

LIST OF TABLES

LIST OF FIGURES

LIST OF ABBREVIATIONS

CHAPTER ONE: INTRODUCTION

Process-Structure-Property Linkages

Materials Microstructure Evolution Prediction

Dissertation Structure

CHAPTER TWO: METHOD

Phase Field Method

Dataset Generation

Dataset Generation for Steady State Case Study

Dataset Generation for Unsteady State Case Study

Dataset Generation for Microstructure Evolution Case Study

Deep Learning Methodology

Fully-Connected Layers

Convolutional Neural Networks (CNN)

Proposed Model for Steady State Case Study

Proposed Model for Unsteady State Case Study

Proposed Model for Microstructure Evolution Prediction

Measure the Similarity Between Images

CHAPTER THREE: DEEP LEARNING APPROACH FOR CHEMISTRY AND PROCESSING HISTORY PREDICTION FROM MATERIALS MICROSTRUCTURE

…………………………………………………………………………………………………………………….

Phase-Field Modeling and Dataset Generation ……………………………………………

Convolutional Layers for Feature Extraction ………………………………………………

Temperature and Chemical Compositions Prediction …………………………………..

Validation of The Proposed Model with The Experimental Data ……………………

Conclusion …………………………………………………………………………………………..

Data availability ……………………………………………………………………………………

CHAPTER FOUR: PROCESSING TIME, TEMPERATURE, AND INITIAL CHEMICAL COMPOSITION PREDICTION FROM MATERIALS MICROSTRUCTURE BY DEEP NETWORK FOR MULTIPLE INPUTS AND FUSED

DATA ……………………………………………………………………………………………………………

Phase-Field Modeling and Dataset Generation ……………………………………………

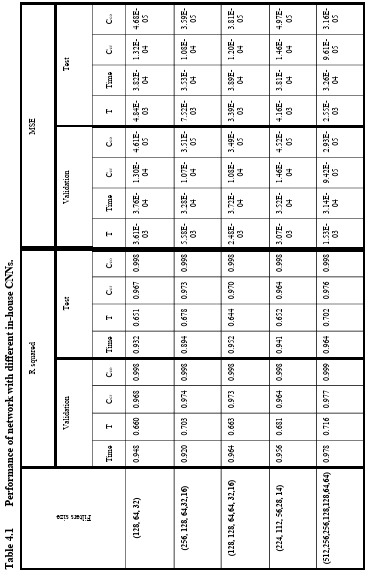

Deep Network Training ………………………………………………………………………….

Model Performance Analysis …………………………………………………………………..

Validation of The Proposed Model with the Experimental Data …………………….

Conclusion …………………………………………………………………………………………..

Data availability ……………………………………………………………………………………

CHAPTER FIVE: SPATIOTEMPORAL PREDICTION OF MICROSTRUCTURE EVOLUTION WITH PREDICTIVE RECURRENT NEURAL NETWORK …………….

Phase-Field Modeling for Microstructure Sequences Generation …………………..

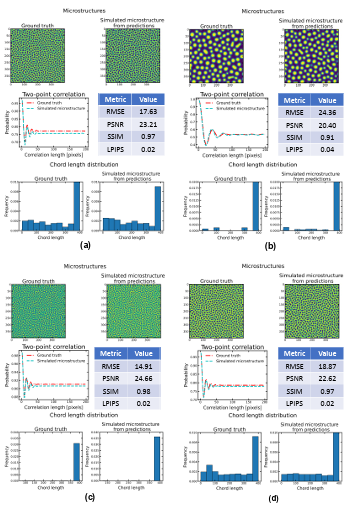

Microstructure Evolution Prediction by PredRNN ……………………………………….

Trained Model Performance on The Microstructure Evolution Prediction During Time ……………………………………………………………………………………………………

Trained Model Inference Performance in Future Microstructures Prediction ……

Conclusion ……………………………………………………………………………………………

Data availability …………………………………………………………………………………….

CONCLUSION AND FUTURE WORKS ……………………………………………………………

Future Works ………………………………………………………………………………………..

REFERENCES ………………………………………………………………………………………………..

LIST OF TABLES

Table 2.1 Phase-field model input parameters [100, 102, 103] ………………………….

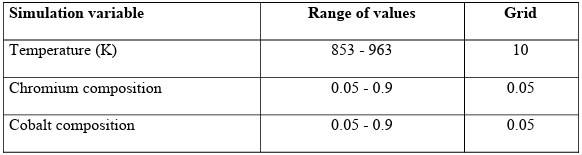

Table 2.2 Simulation variables and their range of values for database generation of steady state case study. …………………………………………………………………

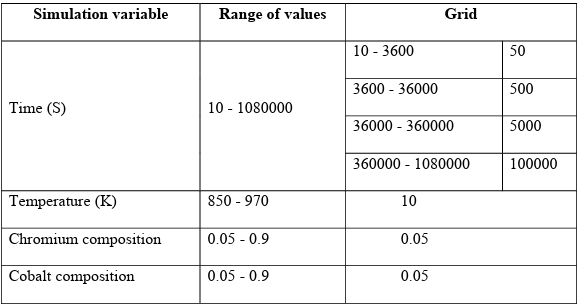

Table 2.3 Simulation variables and their range of values for database generation of unsteady state case study. ……………………………………………………………..

Table 2.4 Parameters selected for model specification, compilation, and cross validation. ………………………………………………………………………………….

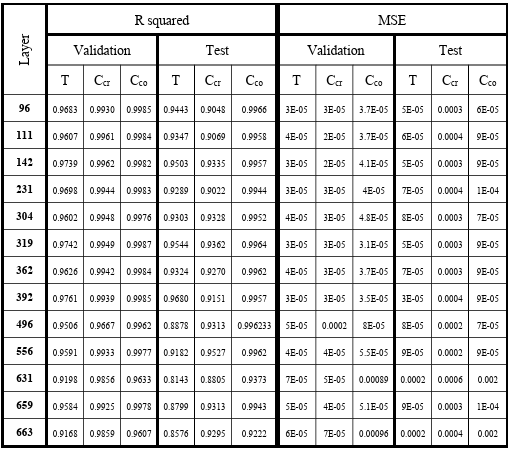

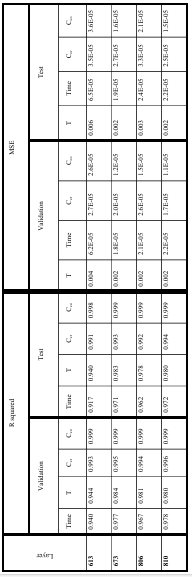

Table 3.1 R-squared and MSE of model predictions for training and testing dataset when different layers of EfficientNet-B6 are used for microstructures’ feature extraction. ……………………………………………………………………….

Table 3.2 R-squared and MSE of model predictions for training and testing dataset when different layers of EfficientNet-B7 are used for microstructures’ feature extraction. ……………………………………………………………………….

Table 4.1 Performance of network with different in-house CNNs. …………………….

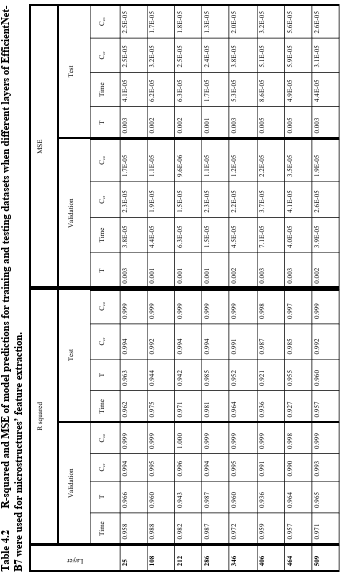

Table 4.2 R-squared and MSE of model predictions for training and testing datasets when different layers of EfficientNet-B7 were used for microstructures’ feature extraction. ……………………………………………………………………….

LIST OF FIGURES

Figure 1.1 Schematic of materials design workflow by forward and inverse design using PSP linkages. ……………………………………………………………………….

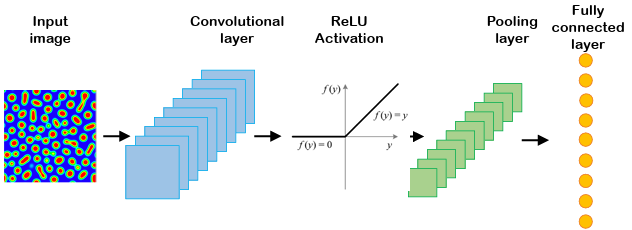

Figure 2.1 Schematic of a typical convolutional neural network. ………………………..

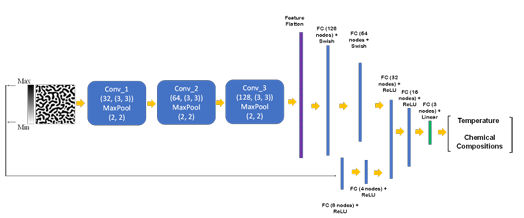

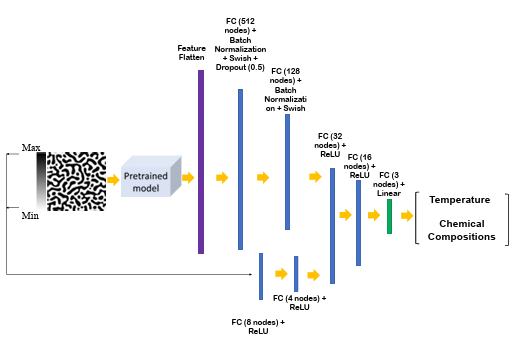

Figure 2.2 The flowchart of the developed model for chemistry and processing history prediction from microstructure images (FC: fully-connected layer) …………………………………………………………………………………………………

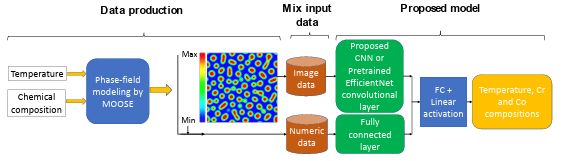

Figure 2.3 The flowchart of the developed model for chemistry and processing history prediction from microstructure images (FC: fully-connected layer)…………………………………………………………………………………………………

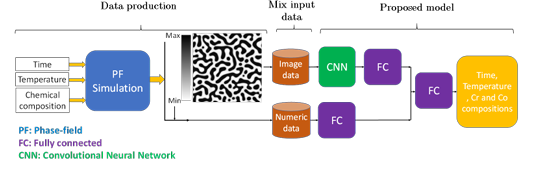

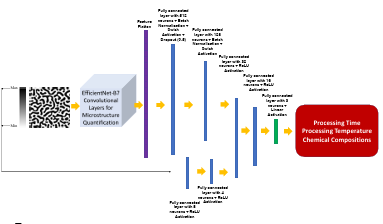

Figure 2.4 The flowchart of the developed model for chemistry, time, and temperature prediction from microstructure images (FC: fully-connected layer) …………………………………………………………………………………………

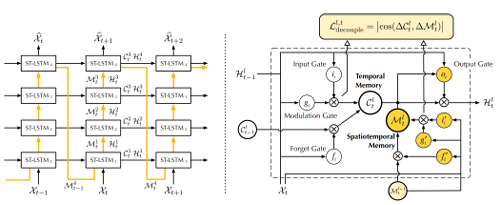

Figure 2.5 Left: the main architecture of PredRNN, in which the orange arrows denote the state transition paths of Ml t, namely the spatiotemporal memory flow. Right: the ST-LSTM unit with twisted memory states serves as the building block of the proposed PredRNN, where the orange circles denote the unique structures compared with ConvLSTM (the figure was adopted from the original study [157]). ……………………………………………………….

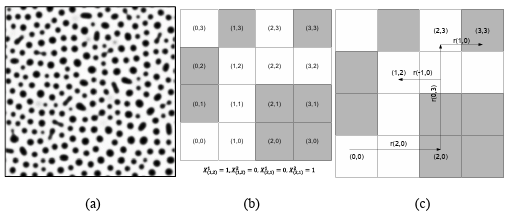

Figure 2.6 (a) A real two-phase microstructure, (b) and (c) a simple checkerboard microstructure for presenting X_uv and two-point correlation (white color is phase 1 and black color is phase 2) ………………………………………………

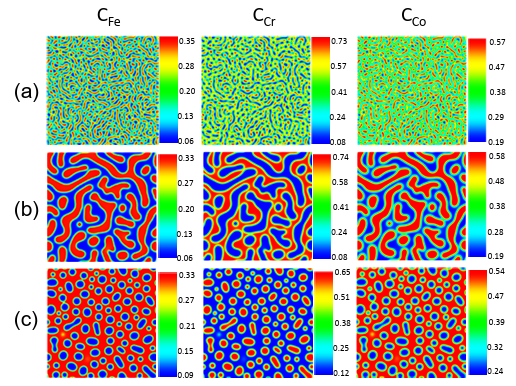

Figure 3.1 Fe-Cr-Co alloys microstructure generated by the phase-field method for:a) Fe-20%, Cr-40%, Co-40% at 873K, b) Fe-20%, Cr-40%, Co-40% at963K, c) Fe-25%, Cr-30%, Co-45% at 933K. (Composition are in atomic percent). …………………………………………………………………………………….

Figure 3.2 A sample workflow of dataset construction………………………………………

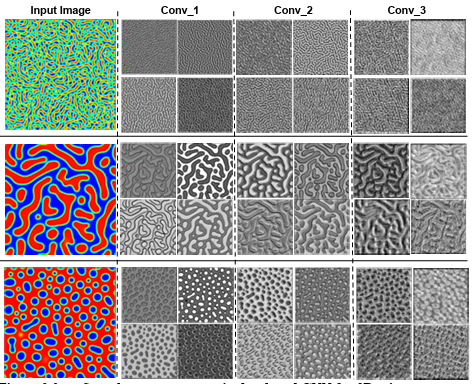

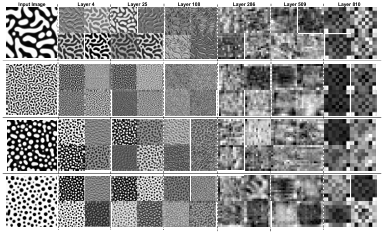

Figure 3.3 Sample response maps in developed CNN for 2D microstructure morphology inputs. The response map of the first four filters of three

convolutional layers is illustrated for three input images. The layer

numbers are presented at the top of the images. ………………………………..

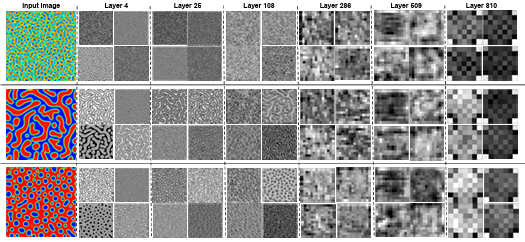

Figure 3.4 Sample response maps in EfficientNetB7 for 2D microstructure morphology inputs. The response map of the first four filters of some convolutional layers is illustrated for three input images. The layer numbers are presented at the top of the images. ………………………………..

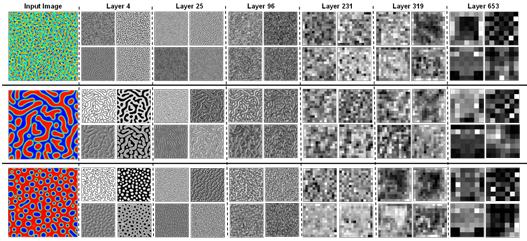

Figure 3.5 Sample response maps in EfficientNetB6 with 2D microstructure inputs. The response map of the first four filters of some convolutional layers is illustrated for three input images. The layer number is presented at the top of the figure. ………………………………………………………………………………

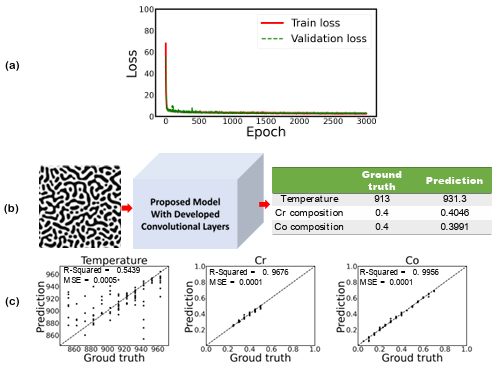

Figure 3.6 The architecture of the proposed model (input image size is 224 × 224 pixels). ………………………………………………………………………………………

Figure 3.7 a) Training and validation loss per each epoch, b) prediction of temperature and chemical compositions for a test dataset, and c) the parity plots of temperature and chemical compositions for the testing dataset from the proposed model when proposed CNN are used for microstructures’ feature extraction (input image size is 224 × 224 pixels)

………………………………………………………………………………………………..

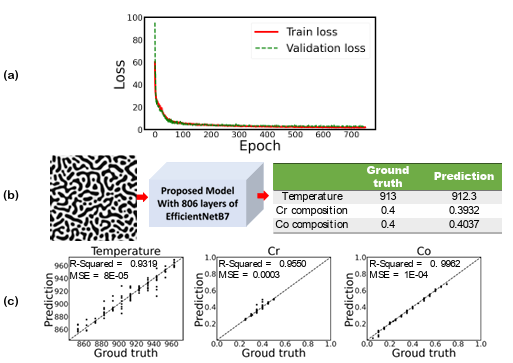

Figure 3.8 a) Training and validation loss per each epoch, b) prediction of temperature and chemical compositions for a random test dataset, and c) the parity plots of temperature and chemical compositions for the testing dataset from the proposed model when first 806 layers of EfficientNetB7 are used for microstructures’ feature extraction (The size of the input images are 224 × 224 pixels) …………………………………………………………

Figure 3.9 a) Training and validation loss per epoch, b) prediction of temperature and chemical compositions for a test dataset, and c) the parity plots of temperature and chemical compositions for the testing dataset from the proposed model when the proposed CNN is used for microstructures’ feature extraction (input image size is 224 × 224 pixels)

Figure 3.10 Prediction of chemistry and processing temperature for an experimental TEM image adopted from Okada et al. [182]. The original image was cropped to be in the desired size of 224 × 224 pixels. ………………………..

Figure 4.1 The phase-field method generates Fe-Cr-Co alloy microstructures (Compositions are in atomic percent). …………………………………………….

Figure 4.2 A sample workflow of dataset construction. …………………………………….

Figure 4.3 Sample response maps in EfficientNetB7 with 2D microstructure inputs. The response map of the first four filters of some convolutional layers is illustrated for four input images. The layer number is presented at the top

of the figure. ……………………………………………………………………………….

Figure 4.4 The architecture of the proposed model (input image size is 224 × 224 pixels). ………………………………………………………………………………………

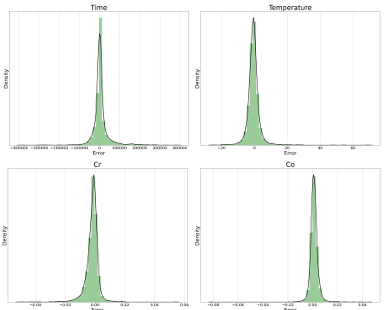

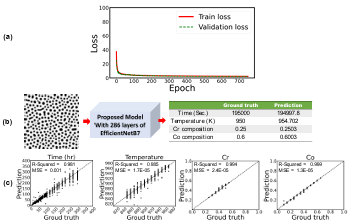

Figure 4.5 Error distribution for the testing dataset from the proposed model when the first 286 layers of EfficientNetB7 are used for microstructures’ feature extraction (The size of the input images is 224 × 224 pixels) ………………

Figure 4.6 a) Training and validation loss per each epoch, b) prediction of time, temperature, and chemical compositions for a random test dataset, and c) the parity plots for time, temperature, and chemical compositions for the testing dataset based on the transfer learning model when the first 286 layers of EfficientNetB7 are used for microstructures’ feature extraction (The size of the input images are 224 × 224 pixels) …………………………..

Figure 4.7 Different microstructures a) at constant time and temperature, b) at constant time and chemical compositions, and c) at constant temperature and chemical compositions. …………………………………………………………..

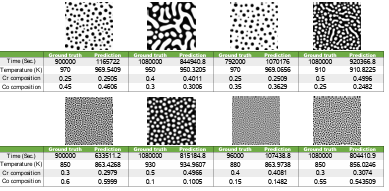

Figure 4.8 Some worst cases for time (first row of images) and temperature (second row of images) predictions…………………………………………………………….

Figure 4.9 Comparison of the ground truth microstructures with the simulated microstructures from model predictions for four random cases with high errors in time. ……………………………………………………………………………..

Figure 4.10 Comparison of the ground truth microstructures with the simulated microstructures from model predictions for four random cases with errors in time and temperature. ……………………………………………………………….

Figure 4.11 Prediction of processing time, temperature, and chemistry for an experimental TEM image adopted from Okada et al. [182]. The original image was cropped to be in the desired size of 224 × 224 pixels. …………

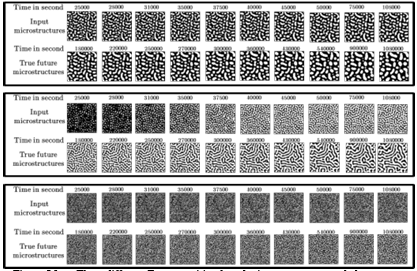

Figure 5.1 Three different Fe-composition-based microstructure morphology sequences …………………………………………………………………………………..

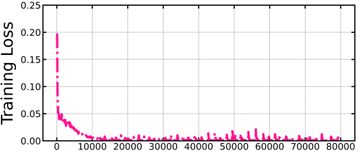

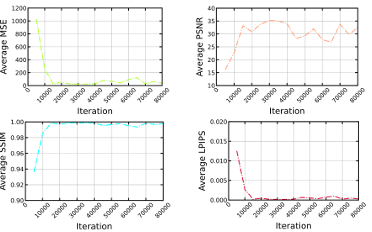

Figure 5.2 Training loss per iteration ……………………………………………………………..

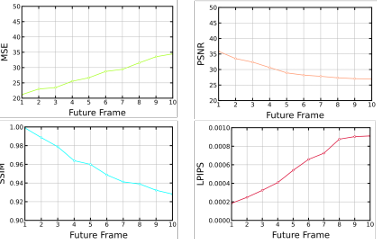

Figure 5.3 Average MSE, PSNR, SSIM, and LPIPS for test sequences during training per each iteration …………………………………………………………………………

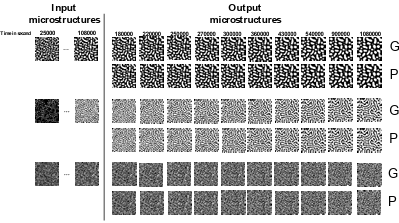

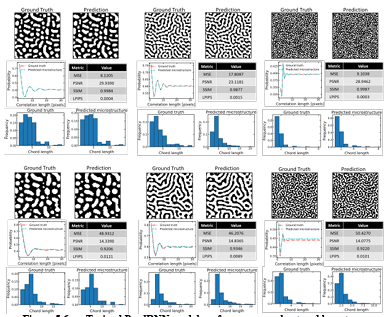

Figure 5.4 Frame-wise results on the three randomly selected samples from the test set produced by the final PredRNN model (predictions (P) vs. ground truth (G)) …………………………………………………………………………………………..

Figure 5.5 Frame-wise results on the test set produced by final PredRNN model ….

Figure 5.6 Trained PredRNN model performance on short and long-term prediction for three randomly selected samples from the test set ………………………..

Figure 5.7 Comparison of trained PredRNN model speed with PF simulation on a randomly selected sample from the test set ………………………………………

LIST OF ABBREVIATIONS

ML Machine Learning

3D Three-Dimensional

2D Two-Dimensional

PF Phase Field

AI Artificial Intelligence

DL Deep Learning

RNN Recurrent Neural Network

CNN Convolutional Neural Network

LSTM Long Short-Term Memory

PCA Principal Component Analysis

RVE Representative Volume Element

TEM Transmission Electron Microscopy

MSE Mean Squared Error

PSNR Peak Signal-to-Noise Ratio

SSIM Structural Similarity Index

LPIPS Learned Perceptual Image Patch Similarity

CHAPTER ONE: INTRODUCTION

This Ph.D. dissertation aims to develop a deep learning (DL) framework that enables the prediction of the chemistry and processing history behind a microstructure, as well as the prediction of microstructure morphology during microstructure evolution. The developed models predict processing history and chemical composition from microstructure images, and predict microstructure morphologies, without expensive and time-consuming simulations and experiments. In doing so, this work provides the materials science community with knowledge and algorithms that can be used to develop new materials with desired properties. To this end, we first review previous studies that use machine learning to construct PSP linkages and to predict the evolution of material microstructures.

Process-Structure-Property Linkages

Heterogeneous materials are widely used in various industries, such as aerospace, automotive, and construction. Their properties depend greatly on their microstructure, which is a function of the chemical composition and the processing route used to produce them. To accelerate the design of novel materials, the construction of process-structure-property (PSP) linkages is necessary. Establishing PSP linkages through experiments alone is impractical because experiments are costly and time-consuming. Therefore, computational methods are used to study the structure of materials and their properties. A basic assumption in the computational modeling of materials is that they are periodic on the microscopic scale and can be approximated by representative volume elements (RVEs) [1]. Determining the effects of process conditions and chemical composition on the characteristics of the RVE, such as volume fraction, microstructure, and grain size, and consequently on the materials’ properties, leads to the development of PSP linkages. In the past two decades, the phase-field (PF) method has been increasingly used as a robust method for studying the spatiotemporal evolution of materials’ microstructure and physical properties [2].

It has been widely used to simulate different evolutionary phenomena, including grain growth and coarsening [3], solidification [4], thin-film deposition [5], dislocation dynamics [6], vesicle formation in biological membranes [7], and crack propagation [8]. PF models solve a system of partial differential equations (PDEs) for a set of continuous field variables. However, high-fidelity PF simulations are inherently computationally expensive because they require solving several coupled PDEs simultaneously [9]. Therefore, constructing PSP linkages for complex materials based solely on the PF method is inefficient. To address this challenge, machine learning (ML) methods have recently been proposed as an alternative for creating PSP linkages from limited experimental data, simulation data, or both [10]. Artificial intelligence (AI), ML, and data science help speed up and simplify the discovery of new materials [11]. Developing and deploying a supporting data infrastructure that efficiently integrates closed-loop iterations between experiments and multi-scale modeling and simulation is critical. This need is addressed by a new interdisciplinary field called Materials Data Science and Informatics [12-18].
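To make concrete what solving a PF equation involves, the following Python sketch integrates the binary Cahn-Hilliard equation, ∂c/∂t = M∇²(f′(c) − κ∇²c), which describes spinodal decomposition, on a periodic 2D grid with a semi-implicit Fourier-spectral scheme. It is a minimal illustration under stated assumptions, not the dissertation's Fe-Cr-Co model: the alloy is reduced to a single nondimensional order parameter c, and the double-well free energy f(c) = (c² − 1)²/4, the mobility M, the gradient coefficient κ, the time step, and the function name `cahn_hilliard_2d` are all assumed for illustration.

```python
import numpy as np

def cahn_hilliard_2d(n=64, steps=2000, dt=0.05, mobility=1.0, kappa=1.0,
                     c_mean=0.0, noise=0.01, seed=0):
    """Evolve a nondimensional binary Cahn-Hilliard field on an n x n periodic grid.

    Assumed free energy density f(c) = (c**2 - 1)**2 / 4, hence df/dc = c**3 - c.
    Semi-implicit spectral step: the linear fourth-order gradient term is treated
    implicitly, the nonlinear chemical-potential term explicitly.
    """
    rng = np.random.default_rng(seed)
    # Homogeneous (quenched) alloy plus small composition fluctuations.
    c = c_mean + noise * rng.standard_normal((n, n))
    # Wavenumbers for unit grid spacing; periodic boundaries are implied by the FFT.
    k = 2.0 * np.pi * np.fft.fftfreq(n, d=1.0)
    kx, ky = np.meshgrid(k, k, indexing="ij")
    k2 = kx**2 + ky**2
    c_hat = np.fft.fft2(c)
    for _ in range(steps):
        c = np.real(np.fft.ifft2(c_hat))
        dfdc_hat = np.fft.fft2(c**3 - c)
        # The k = 0 mode is never changed, so the mean composition is conserved.
        c_hat = (c_hat - dt * mobility * k2 * dfdc_hat) / (1.0 + dt * mobility * kappa * k2**2)
    return np.real(np.fft.ifft2(c_hat))

field = cahn_hilliard_2d()
```

Treating the stiff fourth-order term implicitly in Fourier space permits a much larger time step than an explicit scheme, yet every step still needs forward and inverse FFTs over the whole grid, and a multicomponent model couples several such equations; this is the cost that motivates ML surrogates.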

A fundamental element of the data science approach is a multi-faceted framework that enables the research community to collect, aggregate, curate, disseminate, and reuse valuable knowledge. In materials innovation efforts, this knowledge is primarily desired in the form of PSP linkages across the length and time scales relevant to the material system of interest [19-24]. In a multi-scale materials modeling effort, this means developing a formal data science approach to extract reusable PSP linkages from an ensemble of simulation and experimental datasets, as depicted in Figure 1.1.

The top arrow in Figure 1.1, the forward design philosophy, shows the typical workflow that materials scientists have historically used to develop PSP linkages. In forward design, we loop through the ordered chain of process, structure, and property. Forward design usually combines experiments and advanced physics-based models with numerical algorithms. Because materials discovery requires exploring a large design space, forward design tends to be costly and time-consuming. This cost can be a significant obstacle to materials innovation, even in the realm of simulation, because simulations are often expensive and the design space is huge. This is precisely where data science approaches offer many benefits. As shown in Figure 1.1, data science tools and algorithms enable inverse design, i.e., starting from the desired properties and finding the required processing. By taking full advantage of advanced statistics and machine learning techniques, data science can provide a mathematically rigorous framework for PSP linkages in multi-scale materials design. As depicted in Figure 1.1, one of the main benefits of adding data science components to the workflow is that it makes solving the inverse problem, which is the ultimate goal of materials innovation efforts, practical.

In fact, materials informatics provides a computationally inexpensive approach to materials design, mainly because the PSP linkages are cast as metamodels (surrogate models). These models can easily be used to find the optimum conditions for making materials with desired properties.
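The surrogate idea can be illustrated with a small Python sketch under loudly stated assumptions: `expensive_forward_model` is a hypothetical analytic stand-in for a costly process-to-property simulation, the polynomial degree and number of samples are arbitrary, and a dense grid search stands in for a proper optimizer. The forward model is evaluated at only a few design points, a cheap polynomial metamodel is fitted to them, and the inverse question (which processing temperature gives a target property?) is then answered using the metamodel alone.

```python
import numpy as np
from numpy.polynomial import Polynomial

def expensive_forward_model(temperature_k):
    """Hypothetical stand-in for a costly process-to-property simulation."""
    return 1.5 * (1.0 - np.exp(-(temperature_k - 800.0) / 100.0))

# 1) Run the "expensive" model at a handful of design points only.
train_t = np.linspace(850.0, 1000.0, 12)
train_y = expensive_forward_model(train_t)

# 2) Fit a cheap polynomial metamodel (Polynomial.fit rescales the domain internally).
surrogate = Polynomial.fit(train_t, train_y, deg=4)

# 3) Inverse design on the surrogate: find the temperature whose predicted
#    property is closest to the target value.
def inverse_design(target, lo=850.0, hi=1000.0, n=10001):
    grid = np.linspace(lo, hi, n)
    return float(grid[np.argmin(np.abs(surrogate(grid) - target))])

target_property = expensive_forward_model(930.0)
recovered_t = inverse_design(target_property)
```

Only 12 "expensive" evaluations are made; the 10,001 evaluations used for the inverse search all hit the surrogate, which is what makes inverse design cheap once the metamodel exists.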

In recent years, the use of data science in various fields of materials science has increased significantly [25-30]. For instance, data science has been applied alongside density functional theory calculations to relate atomic interactions to the properties of materials based on quantum mechanics [31-34]. AI is also used to establish PSP linkages in the context of materials mechanics. In this case, ML can be used to design new materials with desired properties or to optimize the production process of existing materials to improve their properties. Through data science, researchers can examine the complex and nonlinear behavior of a materials production process that directly affects the materials’ properties [35]. Many studies have focused on cause-effect design, i.e., finding material properties from the microstructure or processing history. These studies have attempted to predict the structure of materials from processing parameters, or material properties from the microstructure and processing history [10, 25, 36-43]. A less addressed but essential problem is goal-driven design, which tries to find the processing history of materials from their microstructures. In these cases, the optimal microstructure that provides the optimal properties is known, e.g., via physics-based models, and the goal is to find the chemistry and processing routes that would lead to that microstructure.

Figure 1.1 Schematic of materials design workflow by forward and inverse design using PSP linkages.

The use of microstructure images in ML modeling is challenging. Microstructure quantification has been reported as the core of PSP linkage construction [37]. Microstructure quantification is important from two perspectives. First, it can increase the accuracy of the developed data-driven model. Second, an in-depth understanding of the microstructures can improve comprehension of the effects of process variables and chemical composition on the properties of materials [37]. In recent years, deep learning (DL) methods have been used successfully in other fields, such as computer vision, and their still-limited applications in materials science have shown them to be reliable and promising [38]. The main advantages of DL methods are their simplicity, flexibility, and applicability to all types of microstructures. Furthermore, DL has been broadly applied in materials science to improve targeted properties [34, 39-46].

One form of DL model that has been used extensively for feature extraction in applications such as image, video, voice, and natural language processing is the convolutional neural network (CNN) [47-50]. In materials science, CNNs have been used for various image-related problems. Cang et al. used a CNN to achieve a 1000-fold dimension reduction of the microstructure space [51]. DeCost et al. [52] applied a CNN to microstructure segmentation. Xie and Grossman [53] used a CNN on crystal graphs to predict material properties; their framework predicted eight different properties, such as formation energy, bandgap, and shear modulus, with high accuracy. CNNs have also been employed to index electron backscatter diffraction patterns and determine the crystal orientation of crystalline materials [54].
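The core operation behind these CNN feature extractors can be sketched in a few lines of NumPy: a filter slides over the image (a "valid" 2D cross-correlation, which is what deep learning libraries call convolution), a ReLU nonlinearity is applied, and 2 × 2 max pooling downsamples the resulting response map. In this minimal sketch, the 8 × 8 two-phase image and the hand-set vertical-edge filter are illustrative assumptions; a trained CNN learns many such filters from data and stacks several layers.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation, the operation a CNN convolutional layer applies."""
    windows = sliding_window_view(image, kernel.shape)  # (H-k+1, W-k+1, k, k)
    return np.einsum("ijkl,kl->ij", windows, kernel)

def relu(x):
    return np.maximum(x, 0.0)

def max_pool2x2(x):
    """Non-overlapping 2x2 max pooling (odd trailing rows/columns are dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# Toy two-phase "microstructure": left half phase 0, right half phase 1.
micro = np.zeros((8, 8))
micro[:, 4:] = 1.0
# Hand-set vertical-edge filter (a trained CNN would learn its filters).
edge_filter = np.array([[-1.0, 0.0, 1.0]] * 3)

feature_map = max_pool2x2(relu(conv2d_valid(micro, edge_filter)))
```

The response is nonzero only in the pooled column that straddles the phase boundary, so `feature_map` is a 3 × 3 array whose middle column equals 3 and whose other entries are 0: the filter has "detected" the interface.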

The stiffness of two-phase composites has been predicted successfully by a deep learning approach combining convolutional and fully-connected layers [55]. In a comparative study, CNNs and the materials knowledge systems (MKS) approach, which was proposed by the Kalidindi group and uses n-point correlations for microstructure quantification [56-58], were used to quantify microstructures, and the resulting features were employed to predict the strain in microstructural volume elements. The comparison showed that the features extracted by the CNN provided more accurate predictions [59]. Cecen et al. [20] used a CNN to find the salient features of a collection of 5900 microstructures. The results showed that the features obtained from the CNN predicted properties more accurately than 2-point correlations while also significantly reducing the computational cost. Comparisons of DL approaches, including CNNs, with the MKS method and with single-agent and multi-agent methods found DL to be more accurate in each case [59-61]. Zhao et al. used the electronic charge density (ECD) as a generic, unified 3D descriptor for elasticity prediction; their results showed better predictive power for bulk modulus than for shear modulus [62]. CNNs have also been applied to find universal 3D voxel descriptors for predicting the target properties of solid-state materials [63]; the introduced descriptors outperformed other descriptors in predicting Hartree energies of solid-state materials.
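For comparison with CNN features, the two-point statistics mentioned above can be computed efficiently with the FFT. The Python sketch below assumes periodic boundaries and a binary phase-indicator image I(x), and returns S₂(r) = (1/N) Σₓ I(x) I(x + r) for every shift r at once. It illustrates the standard FFT approach and is not taken from the MKS code base; the function name is hypothetical.

```python
import numpy as np

def two_point_autocorrelation(indicator):
    """Periodic two-point autocorrelation S2(r) of a phase-indicator image.

    S2(r) = (1/N) * sum_x I(x) * I(x + r), evaluated for all shifts r via the
    FFT (Wiener-Khinchin): S2 = IFFT(|FFT(I)|^2) / N. Index [0, 0] is r = 0.
    """
    f = np.fft.fftn(indicator)
    return np.real(np.fft.ifftn(f * np.conj(f))) / indicator.size

# One-pixel 4 x 4 checkerboard: phase 1 where (i + j) is odd.
board = (np.indices((4, 4)).sum(axis=0) % 2).astype(float)
s2 = two_point_autocorrelation(board)
```

On this checkerboard, S₂ at zero shift equals the volume fraction (0.5), nearest-neighbor shifts give 0 because a neighbor always belongs to the other phase, and diagonal shifts return to 0.5, mirroring the checkerboard example used in this dissertation to present two-point correlation.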

Training a deep CNN usually requires an extensive training dataset, which is not available in many applications. In such cases, transfer learning, which reuses a pretrained network, can be applied to the new application. In transfer learning, all or part of a pretrained network, such as VGG16, VGG19 [64], Xception [65], ResNet [66], or Inception [67], trained by the computer vision community on large open image datasets such as ImageNet, MS COCO, and PASCAL VOC, is reused for the desired application. Transfer learning is particularly beneficial in materials science, where image-based data are generally scarce. DeCost et al. [68] adopted VGG16 to classify microstructures based on their annealing conditions. Ling et al. [25] applied VGG16 to extract features from scanning electron microscope (SEM) images and classify them. Lubbers et al. [69] used the pretrained VGG19 model to identify physically meaningful descriptors in microstructures. Li et al. [70] proposed a framework based on VGG19 for microstructure reconstruction and structure-property prediction. The pretrained VGG19 network was also used by Bostanabad to reconstruct 3D microstructures from 2D microstructures [71].
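The mechanics of transfer learning, freezing a pretrained feature extractor and training only a new head, can be sketched without a deep learning framework. In this Python sketch, `FROZEN_W` is a hypothetical stand-in for pretrained weights: a fixed nonnegative linear map followed by ReLU (the ReLU stays inactive for these nonnegative toy weights). In practice these would be convolutional layers such as VGG16 or EfficientNet loaded from a library. The synthetic task, predicting the volume fraction of random binary 8 × 8 images, and the closed-form ridge-regression head are also assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "pretrained" backbone weights; they are never updated below.
FROZEN_W = rng.uniform(0.0, 1.0 / 64, size=(256, 64))

def frozen_features(images):
    """Flatten 8x8 images and apply the frozen linear map + ReLU (256 features)."""
    flat = images.reshape(len(images), -1)
    return np.maximum(flat @ FROZEN_W.T, 0.0)

def add_bias(features):
    return np.hstack([features, np.ones((len(features), 1))])

def fit_head(features, targets, ridge=1e-6):
    """Train only the new regression head, via closed-form ridge regression."""
    x = add_bias(features)
    return np.linalg.solve(x.T @ x + ridge * np.eye(x.shape[1]), x.T @ targets)

def predict(head, features):
    return add_bias(features) @ head

# Synthetic target task: predict the phase-1 volume fraction of random 8x8 images.
fractions = rng.uniform(0.2, 0.8, size=600)
images = (rng.random((600, 8, 8)) < fractions[:, None, None]).astype(float)
targets = images.mean(axis=(1, 2))

w_before = FROZEN_W.copy()
head = fit_head(frozen_features(images[:500]), targets[:500])
test_pred = predict(head, frozen_features(images[500:]))
test_r2 = 1.0 - np.sum((test_pred - targets[500:]) ** 2) / np.sum(
    (targets[500:] - targets[500:].mean()) ** 2)
```

Only the 257 head parameters are fitted; the backbone is reused unchanged, which is why transfer learning needs far less labeled data than training the whole network.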

The review above shows that most ML-microstructure work in the materials science community has focused on using ML techniques for microstructure classification [72-74], recognition [75], or reconstruction [70, 71], or as a feature-engineering-free framework connecting microstructure to material properties [55, 76, 77]. However, the prediction of process and chemistry from a microstructure morphology image has received limited attention.

Addressing this gap is critical for problems in which the ideal microstructure or morphology, together with the chemistry of its morphology domains, is known, but the chemistry and processing that would lead to that ideal morphology are unknown. The problem becomes much more challenging for multicomponent alloys with complex processing steps. Recently, Kautz et al. [77] used a CNN for microstructure classification and segmentation of uranium alloyed with 10 wt% molybdenum (U-10Mo). They used the segmentation algorithm to calculate the area fraction of the lamellar transformation products (α-U + γ-UMo), and by feeding the total area fraction into the Johnson-Mehl-Avrami-Kolmogorov equation, they predicted the annealing parameters, i.e., time and temperature. However, Kautz et al.'s [77] aging-time prediction did not consider morphology or particle distribution, and no chemistry was involved in the model.

To address this knowledge gap, in this work we develop a mixed-data deep neural network capable of predicting the chemistry and processing history of a micrograph. The model alloy used in this work is Fe-Cr-Co permanent magnets.

Materials Microstructure Evolution Prediction

Material microstructures, the mesoscale structural features that link atomistic building blocks to macroscopic properties, directly govern the processing-structure-property relationships of engineered materials. One of the pillars of contemporary materials research is the ability to control microstructure evolution during processing or service, including common phenomena such as solidification, solid-state phase transformations, and grain growth.

Therefore, understanding and forecasting microstructure evolution has been a key objective of computational materials design. Simulations of microstructure evolution frequently rely on phase-separation or other coarse-grained models formulated as partial differential equations (PDEs), such as those used in phase-field techniques [2, 78], because they can reach time and length scales far larger than those accessible to molecular dynamics. The phase-field method has been used to model a wide range of significant mesoscale evolution processes, including grain growth and coarsening, solidification, thin film deposition, dislocation dynamics, vesicle formation in biological membranes, and crack propagation [3-8].

However, this strategy also has significant drawbacks. First, PDE-based microstructure simulations remain relatively expensive. For explicit time integration of nonlinear PDEs, numerical stability imposes a stringent upper bound on the time-step size. Implicit time-integration techniques allow longer time steps, but at the cost of additional inner iteration loops at each step. Furthermore, although the governing PDEs can in principle be derived from the underlying thermodynamic and kinetic considerations, identifying, parametrizing, and validating them in practice requires a significant amount of work.
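To make the time-step restriction concrete, the following sketch integrates a toy one-dimensional Cahn-Hilliard equation with forward (explicit) Euler and a finite-difference Laplacian on a periodic grid. The double-well energy $f(c) = (c^2-1)^2/4$, the unit mobility and gradient coefficient, and the grid size are illustrative assumptions, not the Fe-Cr-Co model used in this work. For the stiff fourth-order term alone, linear stability analysis gives roughly $\Delta t \le \Delta x^4/(8M\kappa)$, so halving the grid spacing shrinks the admissible time step sixteen-fold.

```python
import numpy as np

def laplacian_1d(u, dx):
    """Second-order central-difference Laplacian on a periodic 1D grid."""
    return (np.roll(u, 1) - 2.0 * u + np.roll(u, -1)) / dx**2

def explicit_cahn_hilliard(c0, dt, n_steps, dx=1.0, mobility=1.0, kappa=1.0):
    """Forward-Euler steps of dc/dt = M * lap(f'(c) - kappa * lap(c))
    for the toy double-well energy f(c) = (c**2 - 1)**2 / 4, so f'(c) = c**3 - c."""
    c = c0.copy()
    with np.errstate(over="ignore", invalid="ignore"):  # let unstable runs overflow quietly
        for _ in range(n_steps):
            mu = c**3 - c - kappa * laplacian_1d(c, dx)  # chemical potential
            c = c + dt * mobility * laplacian_1d(mu, dx)
    return c

dx, mobility, kappa = 1.0, 1.0, 1.0
# Linear stability estimate for the stiff kappa * lap(lap(c)) term only:
# the discrete Laplacian's largest eigenvalue magnitude is 4 / dx**2.
dt_limit = dx**4 / (8.0 * mobility * kappa)

rng = np.random.default_rng(0)
c0 = 0.05 * rng.standard_normal(128)  # small fluctuations about c = 0 (inside the spinodal)

stable = explicit_cahn_hilliard(c0, 0.4 * dt_limit, n_steps=500)
unstable = explicit_cahn_hilliard(c0, 2.0 * dt_limit, n_steps=500)
print(np.isfinite(stable).all(), np.isfinite(unstable).all())
```

With these settings, the run at 0.4 times the estimate stays finite and conserves the mean composition, whereas the run at twice the estimate diverges; an implicit or semi-implicit scheme would relax this bound at the price of solving a system at every step.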

For difficult or less well-studied materials, the evolution principles may not be fully understood or may be too complex to be captured by tractable PDEs. To date, efforts to reduce computational costs have mostly concentrated on high-performance computing architectures [79-82], sophisticated numerical techniques [83, 84], or combining machine learning algorithms with microstructure-based simulations [43, 85-91]. For instance, leading studies have developed surrogate models using a variety of techniques, such as Green's function solutions [85], Bayesian optimization [43, 86], a combination of dimensionality reduction and autoregressive Gaussian processes [88], convolutional autoencoders and decoders [92], or a history-dependent machine-learning method integrated with a statistically representative, low-dimensional description of microstructure evolution generated directly from phase-field simulations, which can rapidly predict microstructure evolution [9].

Even for these successful approaches, however, the main challenge has been balancing accuracy against computational efficiency. For complex, multivariable phase-field models, for example, the computationally efficient Green's function solution cannot guarantee accurate answers. Bayesian optimization techniques, in contrast, can handle complex, coupled phase-field equations, but at a higher computational cost (although the number of required simulations is kept to a minimum because the Bayesian optimization protocol selects the parameter settings for each subsequent simulation). Autoregressive models can only forecast microstructure evolution for the values on which they were trained, which limits their ability to predict beyond the training set. Models based on dimensionality reduction methods such as principal component analysis (PCA) discard a large amount of information, which sacrifices accuracy. This study uses the Predictive Recurrent Neural Network (PredRNN) [93] to forecast how a microstructure, represented as a sequence of 2D images, changes over time.
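The information loss attributed to PCA above can be quantified by the relative reconstruction error when snapshots are projected onto their leading principal components. The sketch below uses NumPy's SVD on random fields that merely stand in for flattened microstructure images; real phase-field snapshots are far more correlated, so they would typically be compressed with much smaller error, and the numbers here illustrate the trend only.

```python
import numpy as np

def pca_reconstruction_error(snapshots, n_components):
    """Relative error after projecting flattened, mean-centered snapshots
    onto their leading `n_components` principal components."""
    X = snapshots.reshape(len(snapshots), -1)
    mean = X.mean(axis=0)
    Xc = X - mean
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)  # rows of Vt: principal directions
    basis = Vt[:n_components]
    X_hat = mean + (Xc @ basis.T) @ basis
    return np.linalg.norm(X - X_hat) / np.linalg.norm(Xc)

rng = np.random.default_rng(1)
snapshots = rng.random((40, 64, 64))  # stand-in for 40 microstructures of 64 x 64 pixels

errors = {k: pca_reconstruction_error(snapshots, k) for k in (1, 5, 20, 40)}
for k, err in errors.items():
    print(f"{k:2d} components: relative error {err:.3f}")
```

Every discarded component removes variance that cannot be recovered from the reduced representation, which is the accuracy cost referred to in the text.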

Dissertation Structure

The dissertation is organized as follows. In chapter two, we describe the methods used in this study, including the PF method, the deep learning methods, training and test data generation, and the error metrics.

In chapter three, we develop a mixed-data deep neural network capable of predicting a micrograph's chemistry and processing history. The model alloy used in this work is Fe-Cr-Co permanent magnets. These alloys undergo spinodal decomposition at temperatures around 853–963 K. We use the PF method to create the training and test datasets for the DL network. A CNN quantifies the microstructures produced by the PF method, and another deep neural network then uses the extracted salient features to predict the temperature and chemical composition.

In chapter four, we present a deep neural network model that predicts a complete set of processing parameters, including temperature, time, and chemistry, from a microstructure micrograph. As a case study, we focus on the spinodal decomposition process, and to demonstrate the model's applicability to realistic alloys, we pick Fe-Cr-Co permanent magnets as the model alloy. We use the PF method to create the training and test datasets for deep network training. The input is a fused dataset that includes the material microstructure as well as the minimum and maximum iron concentrations in the microstructure. We quantify the generated microstructures with a CNN and then combine the salient features extracted from the microstructures with the iron composition to predict the processing history, i.e., annealing time and temperature, and the chemical composition of the micrograph.

In chapter five, we describe microstructure evolution prediction with PredRNN, using spinodal decomposition as a case study. Spinodal decomposition proceeds in two distinct stages: a rapid growth of composition modulations, followed by a slower coarsening stage, during which the Gibbs-Thomson effect causes a progressive increase in the length scale of the phase-separation pattern. We demonstrate that PredRNN can accurately capture the features of earlier microstructures needed to predict long-term microstructures. This result is particularly important because the model predicts morphology evolution in both stages.

Finally, the key findings in this thesis are summarized and possible opportunities for future works are discussed.

This dissertation has led to the following peer-reviewed papers and conference contributions:

1. Amir Abbas Kazemzadeh Farizhandi, Mahmood Mamivand, Processing time, temperature, and initial chemical composition prediction from materials microstructure by deep network for multiple inputs and fused data, Materials & Design, Volume 219, 2022, 110799, ISSN 0264-1275, https://doi.org/10.1016/j.matdes.2022.110799.

2. Amir Abbas Kazemzadeh Farizhandi, Omar Betancourt, Mahmood Mamivand, Deep learning approach for chemistry and processing history prediction from materials microstructure, Sci Rep 12, 4552 (2022), https://doi.org/10.1038/s41598-022-08484-7.

3. Amir Abbas Kazemzadeh Farizhandi, Mahmood Mamivand, Spatiotemporal Prediction of Microstructure Evolution with Predictive Recurrent Neural Network, Submitted to Materials & Design.

4. Amir Abbas Kazemzadeh Farizhandi, Mahmood Mamivand, Chemistry and Processing History Prediction from Materials Microstructure by Deep Learning, 2022 TMS Annual Meeting & Exhibition, Symposium: Algorithm Development in Materials Science and Engineering.

5. Amir Abbas Kazemzadeh Farizhandi, Mahmood Mamivand, Spatiotemporal Prediction of Microstructure by Deep Learning, 2022 TMS Annual Meeting & Exhibition, Symposium: AI/Data Informatics: Computational Model Development, Validation, and Uncertainty Quantification.

6. Leo Smith, Mahmood Mamivand, Amir Abbas Kazemzadeh Farizhandi, Carl Agren, Prediction of Onsager and Gradient Energy Coefficients from Microstructure Images with Machine Learning, Idaho Conference on Undergraduate Research (2022).

7. Keynote Speaker: Mahmood Mamivand, Amir Abbas Kazemzadeh, “Microstructural mediated materials design with deep learning”, International Conference on Plasticity, Damage, and Fracture, Jan 2023

8. Invited Talk: Mahmood Mamivand, Amir Abbas Kazemzadeh, “Chemistry and Processing History Prediction from Microstructure Morphologies”, TMS March 2023

CHAPTER TWO: METHOD

In this chapter, we describe the models and algorithms used in this dissertation. First, we briefly describe the phase-field (PF) model for simulating spinodal decomposition and the dataset generation process. Then, we provide the details of the proposed fused-data deep neural network for predicting processing history and chemistry from materials microstructure morphologies. Finally, we explain the LSTM-based model used to predict the evolution of material microstructures, along with an overview of methods to quantify the similarity between microstructure morphologies.

Phase Field Method

With the enormous increase in computational power and advances in numerical methods, the PF approach has become a powerful tool for the quantitative modeling of microstructures’ temporal and spatial evolution. Some applications of this method include modeling materials undergoing martensitic transformation [94], crack propagation [95], grain growth [96], and materials microstructure prediction for optimization of their properties [97].

The PF method eliminates the need to track each moving boundary explicitly by giving interfaces a finite width, across which the composition or phase changes gradually from one value to another [2]. The problem is thus essentially modeled as a diffusion problem, which can be solved using continuum nonlinear PDEs. There are two main PF PDEs for representing the evolution of PF variables: the Allen-Cahn equation [98] for non-conserved order parameters (e.g., phase regions and grains), and the Cahn-Hilliard equation [99] for conserved order parameters (e.g., concentrations).

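Since the diffusion of constituent elements controls phase separation, during isothermal spinodal decomposition we only need to track the conserved variables, i.e., the Fe, Cr, and Co concentrations. Our model is therefore governed by the Cahn-Hilliard equations. The PF model in this work is primarily adopted from [100]. Because $c_{Fe} + c_{Cr} + c_{Co} = 1$, only two of the three concentrations are independent, and the Cahn-Hilliard equations for spinodal decomposition of the Fe-Cr-Co ternary system are written for Cr and Co:

$$\frac{\partial c_{Cr}}{\partial t} = \nabla\cdot\left(M_{Cr,Cr}\nabla\frac{\delta F_{tot}}{\delta c_{Cr}}\right) + \nabla\cdot\left(M_{Cr,Co}\nabla\frac{\delta F_{tot}}{\delta c_{Co}}\right) \qquad (1)$$

$$\frac{\partial c_{Co}}{\partial t} = \nabla\cdot\left(M_{Co,Cr}\nabla\frac{\delta F_{tot}}{\delta c_{Cr}}\right) + \nabla\cdot\left(M_{Co,Co}\nabla\frac{\delta F_{tot}}{\delta c_{Co}}\right) \qquad (2)$$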

The microstructure evolution is driven primarily by minimization of the total free energy, $F_{tot}$, of the system. The free energy functional for $N$ conserved variables $c_i$ at location $\vec{r}$ is

$$F_{tot} = \int_{\vec{r}}\left[f_{loc}(c_1,\ldots,c_N,T) + f_{gr}(c_1,\ldots,c_N)\right]d\vec{r} + E_{el} \qquad (3)$$

$$f_{gr} = \sum_{i=1}^{N}\frac{\kappa_i}{2}\left|\nabla c_i\right|^2 \qquad (4)$$

where $\kappa_i$ is the gradient energy coefficient of component $i$; here, $\kappa_i$ is taken as a constant. $f_{loc}$ is the local Gibbs free energy density as a function of all concentrations, $c_i$, and temperature, $T$. In this work, we model the body-centered cubic phase of Fe-Cr-Co, for which the Gibbs free energy of the system is described as [100]

$$f_{loc} = f^0_{Fe}c_{Fe} + f^0_{Cr}c_{Cr} + f^0_{Co}c_{Co} + RT\left(c_{Fe}\ln c_{Fe} + c_{Cr}\ln c_{Cr} + c_{Co}\ln c_{Co}\right) + f_E + f_{mg} \qquad (5)$$

where $f^0_i$ is the Gibbs free energy of pure element $i$ and $f_E$ is the excess free energy, defined by

$$f_E = L_{Fe,Cr}\,c_{Fe}c_{Cr} + L_{Fe,Co}\,c_{Fe}c_{Co} + L_{Cr,Co}\,c_{Cr}c_{Co} \qquad (6)$$

where $L_{Fe,Cr}$, $L_{Fe,Co}$, and $L_{Cr,Co}$ are interaction parameters. $f_{mg}$ is the magnetic energy contribution and can be expressed as

$$f_{mg} = RT\ln(\beta+1)\,f(\tau) \qquad (7)$$

where $\beta$ is the atomic magnetic moment and $f(\tau)$ is a function of $\tau \equiv T/T_C$, with $T_C$ the Curie temperature. $E_{el}$ in Eq. (3) is the elastic strain energy of the system, expressed as

$$E_{el} = \frac{1}{2}\int_{\vec{r}} C_{ijkl}\,\varepsilon^{el}_{ij}(\vec{r},t)\,\varepsilon^{el}_{kl}(\vec{r},t)\,d\vec{r} \qquad (8)$$

$$\varepsilon^{el}_{ij}(\vec{r},t) = \varepsilon^{c}_{ij}(\vec{r},t) - \varepsilon^{0}_{ij}(\vec{r},t) \qquad (9)$$

$$\varepsilon^{0}_{ij}(\vec{r},t) = \left[\varepsilon_{Cr}\left(c_{Cr}(\vec{r},t) - c^0_{Cr}\right) + \varepsilon_{Co}\left(c_{Co}(\vec{r},t) - c^0_{Co}\right)\right]\delta_{ij} \qquad (10)$$

where $\varepsilon_{Cr}$ and $\varepsilon_{Co}$ are the lattice mismatches between Cr and Fe and between Co and Fe, respectively, $c^0_{Cr}$ and $c^0_{Co}$ are the initial concentrations of Cr and Co, respectively, and $\delta_{ij}$ is the Kronecker delta. The constrained strain, $\varepsilon^{c}_{ij}(\vec{r},t)$, is solved using the finite element method. The $M_{ij}$ in Eqs. (1) and (2) are Onsager coefficients, i.e., scalar mobilities of the coupled system of concentrations. They can be determined by [100]

$$M_{Cr,Cr} = \left[c_{Fe}c_{Cr}M_{Fe} + (1-c_{Cr})^2 M_{Cr} + c_{Cr}c_{Co}M_{Co}\right]\frac{c_{Cr}}{RT} \qquad (11)$$

$$M_{Co,Co} = \left[c_{Fe}c_{Co}M_{Fe} + c_{Cr}c_{Co}M_{Cr} + (1-c_{Co})^2 M_{Co}\right]\frac{c_{Co}}{RT} \qquad (12)$$

$$M_{Cr,Co} = M_{Co,Cr} = \left[c_{Fe}M_{Fe} - (1-c_{Cr})M_{Cr} - (1-c_{Co})M_{Co}\right]\frac{c_{Cr}c_{Co}}{RT} \qquad (13)$$

The mobility $M_i$ of each element $i$ is determined by

$$M_i = D_{0i}\exp\left(-\frac{Q_i}{k_B T}\right) \qquad (14)$$

where $D_{0i}$ is the self-diffusion coefficient and $Q_i$ is the diffusion activation energy of element $i$. We parametrized the model with calculation of phase diagrams (CALPHAD) data [100]. To solve the nonlinear CH PDEs, we used the Multiphysics Object-Oriented Simulation Environment (MOOSE), an open-source finite element package developed at Idaho National Laboratory and designed for efficient parallel computation on supercomputers [101]. The coupled CH equations were solved using MOOSE's prebuilt weak-form residuals for the CH PDEs, with the input parameters given in Table 2.1.

Table 2.1 Phase-field model input parameters [100, 102, 103]
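Equations (5), (6), and (11)-(14) can be evaluated pointwise in a few lines. The sketch below implements the chemical part of $f_{loc}$ (omitting $f_{mg}$, because its function $f(\tau)$ is not given here) together with the atomic mobilities and Onsager coefficients. The input values in the usage lines are placeholders, not the CALPHAD parameters of Table 2.1, and treating $Q_i$ as an energy per atom (to pair it with $k_B T$) is an assumption about units.

```python
import math

R = 8.314  # gas constant, J/(mol K)

def f_loc_chemical(c, T, f0, L):
    """Eqs. (5)-(6) without the magnetic term f_mg.
    c, f0: dicts keyed by "Fe", "Cr", "Co"; L: dict keyed by element pairs."""
    reference = sum(f0[e] * c[e] for e in c)
    ideal_mixing = R * T * sum(c[e] * math.log(c[e]) for e in c)
    excess = (L[("Fe", "Cr")] * c["Fe"] * c["Cr"]
              + L[("Fe", "Co")] * c["Fe"] * c["Co"]
              + L[("Cr", "Co")] * c["Cr"] * c["Co"])
    return reference + ideal_mixing + excess

def atomic_mobility(D0, Q, T, kB=1.380649e-23):
    """Eq. (14): M_i = D0_i * exp(-Q_i / (k_B T)), assuming Q_i in J per atom."""
    return D0 * math.exp(-Q / (kB * T))

def onsager_coefficients(c, M, RT):
    """Eqs. (11)-(13); returns (M_CrCr, M_CoCo, M_CrCo), with M_CoCr = M_CrCo."""
    fe, cr, co = c["Fe"], c["Cr"], c["Co"]
    m_crcr = (fe * cr * M["Fe"] + (1 - cr) ** 2 * M["Cr"] + cr * co * M["Co"]) * cr / RT
    m_coco = (fe * co * M["Fe"] + cr * co * M["Cr"] + (1 - co) ** 2 * M["Co"]) * co / RT
    m_crco = (fe * M["Fe"] - (1 - cr) * M["Cr"] - (1 - co) * M["Co"]) * cr * co / RT
    return m_crcr, m_coco, m_crco

# Placeholder inputs for illustration only (not the Table 2.1 parameters).
c = {"Fe": 0.6, "Cr": 0.3, "Co": 0.1}
M = {"Fe": 1.0, "Cr": 1.0, "Co": 1.0}
print(onsager_coefficients(c, M, RT=1.0))  # approximately (0.21, 0.09, -0.03)
```

The off-diagonal coefficient can be negative, which is why the coupled terms in Eqs. (1) and (2) cannot be dropped even when the diagonal mobilities dominate.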

Dataset Generation

Dataset Generation for Steady State Case Study

Since the compositions are subject to the constraint that they must sum to one, the dataset was produced based on the mixture design as a design of experiments method [104]. The Simplex-Lattice [105] designs were adopted to provide the data for simulation. The simulation variables and their range of values are given in Table 2.2. The simulations were run on Boise State University R2 cluster computers [106] using the MOOSE framework [101].

Table 2.2 Simulation variables and their range of values for database generation of steady state case study.
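A {q, m} simplex-lattice design takes each of the q components at the levels 0, 1/m, 2/m, ..., 1 and keeps only the combinations that sum to one, which builds the mixture constraint directly into the design. The sketch below enumerates such a lattice for the ternary case; the resolution m = 10 and the bounds in the usage lines (borrowed from the 0.05-0.9 window of the unsteady state case study) are illustrative assumptions, since the actual levels are those of Table 2.2.

```python
from itertools import product

def simplex_lattice(q, m):
    """All points of a {q, m} simplex-lattice design: q proportions taken from
    {0, 1/m, ..., 1} that sum to one."""
    points = []
    for head in product(range(m + 1), repeat=q - 1):
        if sum(head) <= m:  # the last proportion takes up the remainder
            levels = (*head, m - sum(head))
            points.append(tuple(k / m for k in levels))
    return points

design = simplex_lattice(q=3, m=10)  # columns read here as (Cr, Co, Fe) fractions
print(len(design))  # C(m + q - 1, q - 1) = C(12, 2) = 66

# Example bounds, borrowed from the unsteady state case study (0.05-0.9 for Cr and Co).
bounded = [p for p in design if 0.05 <= p[0] <= 0.9 and 0.05 <= p[1] <= 0.9]
print(len(bounded))
```

Because every generated point already satisfies the sum-to-one constraint, no simulation is wasted on infeasible chemistries, which is the main reason to prefer a mixture design over a full factorial grid here.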

After running the simulations, the microstructures showing phase separation were collected from the results. The extracted Fe microstructures, i.e., the morphology of the Fe distribution from the PF simulations, along with the minimum and maximum Fe compositions in each microstructure, are used as inputs to predict the spinodal decomposition temperature and the Cr and Co compositions as processing history parameters. The input is therefore a mixed dataset composed of microstructures, as image data, and Fe compositions, as numerical (continuous) data. Since these are different data types, the machine learning model must be able to ingest mixed data. In general, handling mixed data is challenging because each data type may require separate preprocessing steps, including scaling, normalization, and feature engineering [107].

Dataset Generation for Unsteady State Case Study

To develop suitable training and test datasets, we need to span the possible ranges of the input variables, i.e., time, temperature, and chemical composition. For temperature, we are bounded to the range of 850–970 K, as spinodal decomposition in Fe-Cr-Co occurs in this window. For chemistry, we explore the range of 0.05–0.9 (atomic fraction) for both Cr and Co.

Since the chemistry is subject to the mass conservation constraint, i.e., $c_{Fe} + c_{Cr} + c_{Co} = 1$, we used the Simplex-Lattice design [105], a mixture design method, to generate the chemistry space to explore. Finally, we bounded the time to 300 hours, as our study showed that most microstructures approach equilibrium by this time. Unlike temperature and chemistry, the time domain was not gridded linearly, because the microstructure is very sensitive to aging time in the early stages of annealing, but this sensitivity drops dramatically as time passes. Therefore, we used a fine grid spacing at the beginning (50 s) and increased it exponentially with time, up to 100000 s.
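The nonuniform time sampling can be sketched as a geometric sequence of step sizes. The code below reads the 50 s and 100000 s values as the smallest and largest spacing between saved microstructures and stops at 300 h; the growth factor of 1.5 is a hypothetical choice, since the actual factor is not stated here.

```python
def time_grid(first_step=50.0, max_step=1.0e5, growth=1.5, t_end=300 * 3600.0):
    """Save times (s) whose spacing starts at `first_step`, grows geometrically
    by `growth`, is capped at `max_step`, and stops at `t_end` (300 h)."""
    times, t, dt = [], 0.0, first_step
    while t + dt <= t_end:
        t += dt
        times.append(t)
        dt = min(dt * growth, max_step)  # exponential growth, then a constant cap
    return times

grid = time_grid()
print(len(grid), grid[:4], grid[-1] / 3600.0)
```

Dense sampling early on resolves the fast composition-modulation stage, while the capped spacing keeps the number of saved frames manageable during slow coarsening.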

The variables and their ranges are given in Table 2.3. To cover the full range of input variables, the dataset was generated using a design of experiments (DOE). We generated the microstructures by solving the CH PDEs with the MOOSE framework [101]. The simulations were run on several clusters, including the Boise State University R2 [106] and BORAH [108] clusters and the Jetstream2 cluster of the Extreme Science and Engineering Discovery Environment (XSEDE), which is supported by the National Science Foundation (NSF) [109]. Because the PF technique is deterministic, i.e., not stochastic, and given the physics of spinodal decomposition, each condition needs to be run only once.

Table 2.3 Simulation variables and their range of values for database generation of unsteady state case study.

After the simulations, we collected the morphology of the Fe distribution, which represents the Fe-rich and Fe-depleted (i.e., Cr-rich) regions, as image data. In addition, we used the minimum and maximum Fe compositions in each microstructure as numeric data. The deep network uses both the images and the numeric data as input to predict the time, temperature, and chemical composition. Therefore, different types of network layers, namely convolutional and fully-connected layers, are required to process the input data. We note that the model's accuracy for real materials would likely increase if experimental data were added to the training dataset. However, even an experimental subset of just a few percent of the whole dataset would require hundreds of tailored transmission electron microscopy (TEM) images. Generating such a large experimental dataset is time consuming and costly, so in this work we limit the model to synthetic data. Nevertheless, as we show in the validation part, the model predicts the history of an experimental TEM image reasonably well, because the training and test datasets are generated with a CALPHAD-informed phase field model, and CALPHAD is inherently informed by experimental data.

Dataset Generation for Microstructure Evolution Case Study

The microstructures produced in the unsteady state case study are also used as training, validation, and test data in this study. Sequences of Fe composition microstructure morphologies are used to construct the dataset. Each sequence contains 20 microstructures: the first 10 microstructures, up to 30 h of processing, are used to predict the next 10 microstructures, up to 300 h.
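Arranging the data for sequence-to-sequence learning amounts to splitting each 20-frame stack into context and target halves. The sketch below assumes the Fe maps are stored as a NumPy array of shape (sequences, frames, height, width) and adds a trailing channel axis (a channels-last convention assumed here); the 64 x 64 image size and the random values are placeholders.

```python
import numpy as np

def split_sequences(frames, n_context=10):
    """Split (n_sequences, n_frames, H, W) stacks into context frames and
    prediction targets, adding a trailing channel axis for convolutional RNNs."""
    frames = frames[..., np.newaxis]
    return frames[:, :n_context], frames[:, n_context:]

rng = np.random.default_rng(0)
frames = rng.random((8, 20, 64, 64))  # 8 hypothetical sequences of 20 Fe maps
context, target = split_sequences(frames)
print(context.shape, target.shape)  # (8, 10, 64, 64, 1) (8, 10, 64, 64, 1)
```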

Deep Learning Methodology

Deep learning (DL), an artificial intelligence (AI) tool loosely inspired by the human brain, is widely used for image and natural language processing as well as object and speech recognition [49, 110]. DL relies on deep neural networks and can be applied to supervised tasks, e.g., classification and regression, and to unsupervised tasks, e.g., clustering. In this work, since the input contains two different data types, two different sub-networks are needed to process it: the numerical data are fed into fully-connected layers, while image features are extracted by convolutional layers. For images with a large number of pixels, feeding all pixel values directly into fully-connected layers is often infeasible because it can cause overfitting, increased complexity, and difficulty in model convergence. Hence, convolutional layers are applied to reduce the dimensionality of the image data by extracting image features [73, 111].

Fully-Connected Layers

Fully-connected layers are hidden layers consisting of hidden neurons and an activation function [112]. The number of hidden neurons is usually selected by trial and error.

Neural networks can capture complex nonlinear system behavior through their activation functions. In principle, any nonlinear function that is differentiable (at least almost everywhere) can serve as an activation function. In practice, activation functions such as the rectified linear unit (ReLU), leaky ReLU, hyperbolic tangent (Tanh), sigmoid, Swish, and softmax have been used successfully in many neural network applications [113]. In particular, ReLU (f(x) = max(0, x)) and Swish (f(x) = x·sigmoid(x)) have been recommended for the hidden layers of deep neural networks [114].
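The two recommended activations can be written in a few lines of NumPy. This is an illustrative sketch, with Swish's optional β parameter fixed to 1 (a form also known as SiLU); deep learning frameworks provide their own implementations.

```python
import numpy as np

def relu(x):
    """f(x) = max(0, x): zero for negative inputs, identity otherwise."""
    return np.maximum(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def swish(x):
    """f(x) = x * sigmoid(x): smooth, and slightly negative for x < 0."""
    return x * sigmoid(x)

x = np.array([-3.0, -1.0, 0.0, 1.0, 3.0])
print(relu(x))
print(np.round(swish(x), 3))
```

Unlike ReLU, Swish is smooth at zero and lets small negative values pass, which is one reason it is sometimes preferred in very deep networks.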

Convolutional Neural Networks (CNN)

A convolutional neural network (CNN) is a deep network applied to image processing and computer vision tasks. LeCun et al. first proposed using CNNs for image recognition [115]. Like other deep neural networks, a CNN consists of input, output, and hidden layers. The main difference lies in the hidden layers, which consist of convolutional, pooling, and fully-connected layers that follow one another. Several convolutional and pooling layers can be stacked in a CNN architecture. Convolutional layers extract the salient features of images while retaining the most relevant information. At the same time, the dimensionality of the generated data is reduced before it is fed into the fully-connected layers. Two significant advantages of CNNs are parameter sharing and sparse connectivity. A schematic diagram of a CNN is given in Figure 2.1.

A convolutional layer consists of filters that slide over the image and scan the pixel values to produce a feature map. The feature map then passes through an activation function to add nonlinearity. A pooling layer applies a pooling operation, e.g., maximum or average, over small windows of the feature map, which reduces its size. Different combinations of convolutional and pooling layers are used in various CNN architectures. Finally, fully-connected layers are added and trained on the extracted image features for a particular task, such as classification or regression.

Figure 2.1 Schematic of a typical convolutional neural network.
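The convolution, activation, and pooling steps described above can be sketched in NumPy. As in most deep learning libraries, the "convolution" is implemented as a cross-correlation; the vertical-edge kernel and the 6 x 6 test image are hypothetical examples chosen so that the feature map is easy to check by hand.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation of a single-channel image with one filter."""
    windows = sliding_window_view(image, kernel.shape)  # (H-kh+1, W-kw+1, kh, kw)
    return np.einsum("ijkl,kl->ij", windows, kernel)

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h, w = feature_map.shape[0] // size, feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

image = np.zeros((6, 6))
image[:, 3:] = 1.0                              # a vertical boundary between two "phases"
kernel = np.array([[-1.0, 1.0], [-1.0, 1.0]])   # responds to left-to-right increases
feature_map = np.maximum(0.0, conv2d_valid(image, kernel))  # ReLU adds nonlinearity
pooled = max_pool2d(feature_map)
print(feature_map.shape, pooled)
```

The filter responds only along the boundary, and pooling halves the map while keeping that response, which is the dimensionality reduction referred to in the text.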

As with other neural networks, a CNN is trained by minimizing a cost function, with the weights and biases updated through backpropagation. Many hyperparameters, such as the number and size of filters, regularization and dropout values, and optimizer parameters, as well as the initial weights and biases, must be set before training. Training a CNN usually needs an extensive training dataset, which is not available for all applications. In this situation, transfer learning can help in developing a CNN. In transfer learning, all or part of a pretrained network such as VGG16, VGG19 [64], Xception [65], ResNet [66], or Inception [67], trained by the computer vision research community on large open-source image datasets such as ImageNet, MS COCO, and Pascal, is reused for the desired application. One state-of-the-art family of pretrained networks is EfficientNet, proposed by Tan and Le [116].

EfficientNet builds on the observation that scaling up a CNN can increase its accuracy [117]. Since the effect of network enlargement on accuracy was not fully understood, Tan and Le proposed a systematic approach to scaling up CNNs. A CNN can be scaled up in depth [117], width [118], or resolution [119]; Tan and Le proposed scaling all three together using fixed scaling coefficients [116]. Their results demonstrated that EfficientNet-B7 achieved better accuracy than the best existing networks while using 8.4 times fewer parameters and running 6.1 times faster. In addition, they provided EfficientNet-B0 to -B6, which outperform models of corresponding scale, such as ResNet-152 [117] and AmoebaNet-C [120], in accuracy with far fewer parameters. Given EfficientNet's outstanding performance, its convolutional layers may be able to extract features from images of other sources, such as materials microstructures, even though it was trained on the ImageNet dataset, which is completely different from materials microstructures.
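Tan and Le's compound scaling rule [116] can be written as depth $d = \alpha^\phi$, width $w = \beta^\phi$, and resolution $r = \gamma^\phi$, subject to $\alpha\beta^2\gamma^2 \approx 2$, so that FLOPS grow roughly as $2^\phi$. The sketch below evaluates these multipliers with the coefficients reported for the EfficientNet-B0 baseline ($\alpha = 1.2$, $\beta = 1.1$, $\gamma = 1.15$); it illustrates the rule only and is not meant to reproduce the exact B1-B7 configurations.

```python
# Compound scaling bases reported by Tan and Le for the EfficientNet-B0 baseline.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution

def compound_scale(phi):
    """Depth, width, and resolution multipliers for a compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi

def flops_factor(phi):
    """Convolution FLOPS grow roughly as depth * width**2 * resolution**2."""
    d, w, r = compound_scale(phi)
    return d * w ** 2 * r ** 2

print(round(ALPHA * BETA ** 2 * GAMMA ** 2, 3))  # constraint: close to 2
for phi in range(4):
    d, w, r = compound_scale(phi)
    print(phi, round(d, 2), round(w, 2), round(r, 2), round(flops_factor(phi), 2))
```

Width and resolution enter squared because doubling either roughly quadruples the cost of each convolution, whereas doubling depth only doubles the number of layers.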

Proposed Model for Steady State Case Study

The training and test datasets are produced using the PF method. In this work, two different approaches, an in-house CNN and transfer learning, were proposed to extract the salient features of the microstructure morphologies. We applied either the proposed CNN (Figure 2.2) or part of the pretrained convolutional layers of EfficientNet-B6 and -B7 (Figure 2.3) to extract the microstructure features. The architecture of the proposed CNN was found by testing different combinations of convolutional layers and their parameters and selecting the one with the best accuracy. For transfer learning, different depths of the pretrained convolutional layers were tested to find the best layers for feature extraction.

In parallel, the minimum and maximum Fe compositions in the microstructure, as numerical data, are fed into fully-connected layers. The features extracted from the microstructures and the output of these fully-connected layers are combined and fed into further fully-connected layers to predict the processing temperature and the initial Cr and Co compositions. Different hyperparameters, such as the network architecture, cost function, and optimizer, are tested to find the model with the highest accuracy. The model specifications, compilation settings (here, loss function, optimizer, and metrics), and cross-validation parameters are listed in Table 2.4.

Figure 2.2 The flowchart of the developed model (in-house CNN) for chemistry and processing history prediction from microstructure images (FC: fully-connected layer)

Table 2.4 Parameters selected for model specification, compilation, and cross validation.

Figure 2.3 The flowchart of the developed model (transfer learning with pretrained EfficientNet layers) for chemistry and processing history prediction from microstructure images (FC: fully-connected layer)
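The two-branch, fused-data idea can be illustrated with a NumPy forward pass: a convolutional branch for the Fe map, a fully-connected branch for the minimum and maximum Fe compositions, a combination step, and a fully-connected head with three outputs (temperature and the Cr and Co compositions). This is a minimal sketch under stated assumptions: a single randomly initialized 3 x 3 convolution with global average pooling stands in for the in-house CNN or the pretrained EfficientNet layers, combination is done by concatenation, the layer sizes are hypothetical, and no training is performed.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

rng = np.random.default_rng(0)

def relu(x):
    return np.maximum(0.0, x)

def dense(x, w, b):
    return x @ w + b

def image_branch(images, filters):
    """Conv (valid, 3x3) -> ReLU -> global average pooling; images: (B, H, W)."""
    windows = sliding_window_view(images, filters.shape[1:], axis=(1, 2))  # (B, H-2, W-2, 3, 3)
    fmap = relu(np.einsum("bijkl,fkl->bijf", windows, filters))
    return fmap.mean(axis=(1, 2))  # (B, n_filters)

def fused_forward(images, fe_minmax, params):
    img_feat = relu(dense(image_branch(images, params["filters"]), *params["img_fc"]))
    num_feat = relu(dense(fe_minmax, *params["num_fc"]))
    fused = np.concatenate([img_feat, num_feat], axis=1)  # combine both branches
    hidden = relu(dense(fused, *params["head_fc"]))
    return dense(hidden, *params["out"])  # (B, 3): temperature, c_Cr, c_Co

def init(n_in, n_out):
    return rng.normal(0.0, 0.1, (n_in, n_out)), np.zeros(n_out)

params = {
    "filters": rng.normal(0.0, 0.1, (8, 3, 3)),
    "img_fc": init(8, 16),
    "num_fc": init(2, 8),
    "head_fc": init(24, 16),
    "out": init(16, 3),
}
images = rng.random((4, 64, 64))            # 4 hypothetical Fe maps
fe_minmax = np.array([[0.2, 0.8]] * 4)      # hypothetical min/max Fe fractions
predictions = fused_forward(images, fe_minmax, params)
print(predictions.shape)  # (4, 3)
```

For the unsteady state case, the same structure applies with four outputs instead of three; in practice all weights would be learned jointly by backpropagation.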

Proposed Model for Unsteady State Case Study

In this study, different in-house CNNs or different numbers of pretrained EfficientNet convolutional layers were adopted to extract microstructure features. The proposed framework includes either an in-house CNN or pretrained convolutional layers from EfficientNet-B7 (transfer learning) for microstructure feature extraction, and fully-connected layers for processing the extracted features and the numeric data (the minimum and maximum iron compositions in the micrographs). The in-house CNNs use different numbers of convolutional layers for microstructure feature extraction. For transfer learning, different numbers of pretrained convolutional layers are tested to find the optimum based on overall accuracy.

The architecture of the proposed network is found by testing different combinations of convolutional and fully-connected layers and their parameters and selecting the most accurate one. A schematic flowchart of the proposed framework is given in Figure 2.4. The extracted microstructure features are passed through fully-connected layers and then combined with the output of the fully-connected layers that process the numeric data. The network is trained end-to-end, and its hyperparameters are tuned to find the optimum configuration. The model parameters and specifications are the same as in Table 2.4, except that the output dimension is 4 (time, temperature, and Cr and Co compositions).

Figure 2.4 The flowchart of the developed model for chemistry, time, and temperature prediction from microstructure images (FC: fully-connected layer)

Proposed Model for Microstructure Evolution Prediction

Prediction of microstructure evolution is a spatiotemporal problem. Network architectures for spatiotemporal predictive learning, which encode different inductive biases into neural networks, can generally be grouped into three categories: feed-forward models based on CNNs, recurrent models, and others, such as combinations of convolutional and recurrent networks, as well as Transformer-based and flow-based methods [121]. Convolutional layers bring the inductive bias of group invariance over space into spatiotemporal predictive learning. For next-frame prediction in Atari games, Oh et al. [122] defined an action-conditioned convolutional autoencoder. The Cross Convolutional Network developed by Xue et al. [123] is a probabilistic model that encodes motion information as convolutional kernels and learns to predict a likely set of future frames by learning their conditional distribution. For crowd flow prediction, Zhang et al. [124] suggested using CNNs with residual connections; their model explicitly accounts for the closeness, period, and trend of crowd flows as well as external factors. Convolutional architectures have also been combined with generative adversarial networks (GANs) [125], which reduced the uncertainty of the learning process and improved the sharpness of the generated frames. Most feed-forward models show greater parallel computing efficiency on large-scale GPUs than recurrent models [126-128].

However, because these models learn complex state transition functions as compositions of simpler ones by stacking convolutional layers, they generally fail to capture long-term dependencies across distant frames.

Recent developments in RNNs provide helpful insights into forecasting upcoming visual sequences from historical observations. To forecast future frames in a discrete space of patch clusters, Ranzato et al. [129] built an RNN architecture inspired by language modeling. For video prediction, Srivastava et al. [130] used a sequence-to-sequence LSTM model from neural machine translation [131]. Later, other approaches that model temporal uncertainty, or the multimodal distribution of future frames conditioned on historical observations, were presented by integrating variational inference with 2D recurrence [132-135].

Other techniques successfully extended the forecast horizon by arranging 2D recurrent states in hierarchical designs [136]. Another line of research factorizes video content and motion, typically using sequence-level features and temporally updated RNN states [137]. Typical approaches include optical flows, new adversarial training schemes, relational reasoning between object-centric content and pose vectors, differentiable clustering techniques, amortized inference inspired by unsupervised image decomposition, and new types of recurrent units constrained by partial differential equations [138-143]. These techniques work well for decomposing dynamic visual scenes or learning the conditional distribution of upcoming frames. However, because they primarily use 2D recurrent networks to describe spatiotemporal dynamics in a low-dimensional space, they inadvertently lose visual information in real-world scenarios.

Shi et al. [144] created the Convolutional LSTM (ConvLSTM), which replaces the matrix multiplications in the recurrent transitions of the original LSTM with convolutions, combining the benefits of convolutional and recurrent architectures. Finn et al. [145] built an action-conditioned ConvLSTM network for visual planning and control.
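For reference, the ConvLSTM update can be written as follows. This is written from our reading of the original formulation [144] (including its Hadamard-product peephole terms) and the symbols are our own: $*$ is convolution, $\circ$ is the element-wise product, and $\mathcal{X}_t$, $\mathcal{H}_t$, $\mathcal{C}_t$ are 3D tensors (channels by height by width).

```latex
\begin{aligned}
i_t &= \sigma\left(W_{xi} * \mathcal{X}_t + W_{hi} * \mathcal{H}_{t-1} + W_{ci} \circ \mathcal{C}_{t-1} + b_i\right) \\
f_t &= \sigma\left(W_{xf} * \mathcal{X}_t + W_{hf} * \mathcal{H}_{t-1} + W_{cf} \circ \mathcal{C}_{t-1} + b_f\right) \\
\mathcal{C}_t &= f_t \circ \mathcal{C}_{t-1} + i_t \circ \tanh\left(W_{xc} * \mathcal{X}_t + W_{hc} * \mathcal{H}_{t-1} + b_c\right) \\
o_t &= \sigma\left(W_{xo} * \mathcal{X}_t + W_{ho} * \mathcal{H}_{t-1} + W_{co} \circ \mathcal{C}_t + b_o\right) \\
\mathcal{H}_t &= o_t \circ \tanh\left(\mathcal{C}_t\right)
\end{aligned}
```

Replacing every $*$ by a matrix product (and the tensors by vectors) recovers the fully connected LSTM, which is exactly the substitution described above.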

Shi et al. [146] coupled convolutions with GRUs and used non-local neural connections to expand the receptive fields of state-to-state transitions. Wang et al. [147] introduced a higher-order convolutional RNN that uses 3D convolutions and temporal self-attention to describe the dynamics and includes a time dimension in each hidden state. Su et al. [148] improved the computational efficiency of higher-order ConvLSTMs through low-rank tensor factorization. By modeling visual appearance and temporal dynamics simultaneously, convolutional recurrence provides a platform for further research [149-154]. The Predictive Recurrent Neural Network (PredRNN) [93] improves on these architectures for action-free and action-conditioned video prediction through three components: a spatiotemporal memory flow, a new convolutional recurrent unit with a pair of decoupled memory cells, and a new training method for sequence-to-sequence predictive learning.

The memory cell is the network component that lets stacked LSTMs mitigate the vanishing-gradient problem of RNNs. Strong theoretical and empirical evidence shows that it can latch the gradients of hidden states inside each LSTM unit during training, preserving important information about the underlying temporal dynamics.

However, spatiotemporal predictive learning requires learned representations that differ in several respects from those of other sequential-data tasks, so the state transition pathway of LSTM memory cells may not be optimal. First, most predictive networks for language or speech modeling concentrate on capturing the long-term, non-Markovian properties of sequential data rather than spatial deformations of visual appearance [155, 156]. Forecasting future frames, in contrast, requires careful treatment of both the spatial and the temporal structure of the data.

Second, in other supervised tasks on video data, such as action recognition, low-level features matter less to the output, and high-level semantic features may be informative enough. Because such tasks have no complex structure in their supervision signals, stacked LSTMs do not need to maintain fine-grained representations from the bottom up. The existing recurrent architecture, based on inner-layer memory transitions, may be sufficient to capture temporal variations at each level of the network, but it may not be the best choice for predictive learning, where both low-level details and high-level semantics of spatiotemporal data matter for generating future frames. Wang et al. [93] therefore proposed a new memory prediction framework, PredRNN, which extends the inner-layer transition function of LSTM memory states to a spatiotemporal memory flow.

This framework aims to jointly model the spatial correlations and temporal dynamics at different levels of RNNs. The spatiotemporal memory flow traverses all PredRNN nodes in a zigzag pattern of bidirectional hierarchies: at each time step, a newly introduced memory cell carries low-level information upward from the input to the output, and from the top layer the flow carries the high-level memory state down to the bottom layer at the next time step. The Spatiotemporal LSTM (ST-LSTM), in which the spatiotemporal memory flow interacts with the original unidirectional memory state of LSTMs, serves as the basic building block of PredRNN.
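To make the zigzag routing concrete, the sketch below runs a toy, scalar version of a stacked ST-LSTM network in plain Python. It is not the authors' implementation: each convolution is replaced by a single multiplicative weight, biases are omitted, and one hypothetical weight dictionary (`weights`, with illustrative key names) is shared by all layers, whereas PredRNN uses convolutional kernels and separate parameters per layer. What it does show is the state flow: H and C of each layer pass along time, while the single memory M passes up through the layers and then from the top layer to the bottom layer at the next time step.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def st_lstm_cell(x, h_prev, c_prev, m_in, w):
    """One toy ST-LSTM step on scalars (each convolution replaced by one weight, no biases)."""
    # Temporal memory C: gated by the input and this layer's hidden state from t-1
    g = math.tanh(w["xg"] * x + w["hg"] * h_prev)
    i = sigmoid(w["xi"] * x + w["hi"] * h_prev)
    f = sigmoid(w["xf"] * x + w["hf"] * h_prev)
    c = f * c_prev + i * g
    # Spatiotemporal memory M: gated by the input and the memory arriving from below
    g_m = math.tanh(w["xg_m"] * x + w["mg"] * m_in)
    i_m = sigmoid(w["xi_m"] * x + w["mi"] * m_in)
    f_m = sigmoid(w["xf_m"] * x + w["mf"] * m_in)
    m = f_m * m_in + i_m * g_m
    # Output gate sees both memories; two weights play the role of the 1x1 convolution fusing C and M
    o = sigmoid(w["xo"] * x + w["ho"] * h_prev + w["co"] * c + w["mo"] * m)
    h = o * math.tanh(w["fc"] * c + w["fm"] * m)
    return h, c, m


def predrnn_forward(frames, num_layers, w):
    """Run a stack of toy ST-LSTM cells over a scalar sequence, returning top-layer outputs."""
    h = [0.0] * num_layers  # hidden state of each layer, passed along time
    c = [0.0] * num_layers  # temporal memory of each layer, passed along time
    m = 0.0                 # single spatiotemporal memory: up the stack, then on to t+1
    outputs = []
    for x in frames:
        inp = x
        for l in range(num_layers):
            # For l = 0, m still holds the top layer's memory from the previous step (zigzag)
            h[l], c[l], m = st_lstm_cell(inp, h[l], c[l], m, w)
            inp = h[l]  # the next layer's input is this layer's hidden state
        outputs.append(h[-1])
    return outputs


WEIGHT_NAMES = ["xg", "hg", "xi", "hi", "xf", "hf", "xg_m", "mg", "xi_m", "mi",
                "xf_m", "mf", "xo", "ho", "co", "mo", "fc", "fm"]
weights = {name: 0.5 for name in WEIGHT_NAMES}  # hypothetical constant weights
preds = predrnn_forward([0.1, 0.4, 0.9, 0.3], num_layers=3, w=weights)
print(preds)
```

Because the output gate lies in (0, 1) and is multiplied by a tanh, every prediction stays strictly inside (-1, 1); in a real model a decoder would map the top hidden state back to an image.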

Generating sharp predictions of many future frames appears to require a unified memory mechanism that handles both short-term deformations of spatial details and long-term dynamics. On the one hand, the new spatiotemporal memory cell lets the network learn complex transition functions within short neighborhoods of consecutive frames and increases the depth of nonlinear neurons between time-adjacent RNN states, which considerably raises the capacity of ST-LSTM for modeling short-term dynamics. On the other hand, ST-LSTM retains the temporal memory cell of LSTMs and tightly couples it with the proposed spatiotemporal memory cell, achieving both long-term coherence of hidden states and a fast response to short-term dynamics. Schematics of the PredRNN architecture and the ST-LSTM unit with twisted memory states are given in Figure 2.5.
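The ST-LSTM update can be summarized as follows. This is written from our reading of the PredRNN paper [93], and the notation is an assumption that may differ from the original: $*$ is convolution, $\odot$ the element-wise product, $l$ the layer index, $\mathcal{C}$ the temporal memory, and $\mathcal{M}$ the spatiotemporal memory.

```latex
\begin{aligned}
g_t &= \tanh\left(W_{xg} * \mathcal{X}_t + W_{hg} * \mathcal{H}^l_{t-1} + b_g\right) \\
i_t &= \sigma\left(W_{xi} * \mathcal{X}_t + W_{hi} * \mathcal{H}^l_{t-1} + b_i\right) \\
f_t &= \sigma\left(W_{xf} * \mathcal{X}_t + W_{hf} * \mathcal{H}^l_{t-1} + b_f\right) \\
\mathcal{C}^l_t &= f_t \odot \mathcal{C}^l_{t-1} + i_t \odot g_t \\
g'_t &= \tanh\left(W'_{xg} * \mathcal{X}_t + W_{mg} * \mathcal{M}^{l-1}_t + b'_g\right) \\
i'_t &= \sigma\left(W'_{xi} * \mathcal{X}_t + W_{mi} * \mathcal{M}^{l-1}_t + b'_i\right) \\
f'_t &= \sigma\left(W'_{xf} * \mathcal{X}_t + W_{mf} * \mathcal{M}^{l-1}_t + b'_f\right) \\
\mathcal{M}^l_t &= f'_t \odot \mathcal{M}^{l-1}_t + i'_t \odot g'_t \\
o_t &= \sigma\left(W_{xo} * \mathcal{X}_t + W_{ho} * \mathcal{H}^l_{t-1} + W_{co} * \mathcal{C}^l_t + W_{mo} * \mathcal{M}^l_t + b_o\right) \\
\mathcal{H}^l_t &= o_t \odot \tanh\left(W_{1\times 1} * [\mathcal{C}^l_t, \mathcal{M}^l_t]\right)
\end{aligned}
```

The first four lines are the usual LSTM path for the temporal memory $\mathcal{C}$, and the next four form the new path for $\mathcal{M}$. For the bottom layer, $\mathcal{M}^{0}_t$ is taken to be $\mathcal{M}^{L}_{t-1}$ from the top layer $L$ at the previous step (the zigzag flow), and for $l > 1$ the input $\mathcal{X}_t$ is replaced by $\mathcal{H}^{l-1}_t$.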

The proposed method achieved state-of-the-art performance on five datasets: the Moving MNIST dataset, the KTH action dataset, a radar echo dataset for precipitation forecasting, the Traffic4Cast dataset of high-resolution traffic flows, and the action-conditioned BAIR dataset of robot-object interactions. Details of the investigation are given in the original paper [93]. This dissertation adopts PredRNN to predict microstructure evolution in a split second.

Figure 2.5 Left: the main architecture of PredRNN, in which the orange arrows denote the state transition paths of $\mathcal{M}_t^l$, namely the spatiotemporal memory flow. Right: the ST-LSTM unit with twisted memory states, the building block of PredRNN, where the orange circles denote the structures that differ from ConvLSTM (figure adapted from the original study [157]).

Measure the Similarity Between Images

Perhaps the most fundamental operation underpinning all of computing is the ability to compare data elements. In many fields of computer science this is not particularly challenging: binary patterns can be compared with the Hamming distance, text files with the edit distance, vectors with the Euclidean distance, and so on. In computer vision, however, even the seemingly simple task of comparing visual patterns remains an open problem, which makes the field particularly difficult. Visual patterns are not only very high-dimensional and strongly correlated; the notion of visual similarity itself is often subjective, since it aims to emulate human visual perception [158]. To compare the outcomes of experiments in computer vision tasks, a method for measuring the similarity between two images must therefore be selected. Objective quality or distortion assessment techniques fall into two main categories. The first comprises mathematically defined metrics such as the widely used mean squared error (MSE), peak signal-to-noise ratio (PSNR), root mean square error (RMSE), mean absolute error (MAE), and signal-to-noise ratio (SNR). The second class accounts for properties of the human visual system (HVS) in order to measure perceptual quality [159].

MSE is the most common estimator. It measures the average squared difference between the estimated (predicted) values and the actual values (ground truth); for images, the difference at each pixel is squared and the squares are averaged over all pixels. This works well when the goal is an image whose pixel values best match the real image, but sometimes the structure or relief of the image matters more [158].

The second conventional estimator is the peak signal-to-noise ratio (PSNR). To use it, the maximum possible pixel value must be known, and this value is fixed by the bit depth of the pixel representation: with 8-bit pixels, channel values range from 0 to 255. PSNR is commonly applied to images in the RGB (red, green, blue) color model.

PSNR expresses the ratio between the maximum possible power of a signal and the power of the corrupting noise that affects the fidelity of its representation [159].
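The pixel-wise metrics above can be sketched in a few lines of plain Python. This is a minimal illustration, assuming equal-sized grayscale images stored as lists of rows; PSNR uses the standard definition $\mathrm{PSNR} = 10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$ in decibels, with MAX = 255 for 8-bit pixels. The example images are made up for illustration.

```python
import math


def _pixel_pairs(img_a, img_b):
    """Yield corresponding pixel values of two equal-sized images (lists of rows)."""
    for row_a, row_b in zip(img_a, img_b):
        yield from zip(row_a, row_b)


def mse(img_a, img_b):
    """Mean squared error over all pixels."""
    diffs = [(a - b) ** 2 for a, b in _pixel_pairs(img_a, img_b)]
    return sum(diffs) / len(diffs)


def rmse(img_a, img_b):
    """Root mean square error: same units as the pixel values."""
    return math.sqrt(mse(img_a, img_b))


def mae(img_a, img_b):
    """Mean absolute error over all pixels."""
    diffs = [abs(a - b) for a, b in _pixel_pairs(img_a, img_b)]
    return sum(diffs) / len(diffs)


def psnr(img_a, img_b, max_value=255.0):
    """PSNR in dB; max_value is the peak pixel value (255 for 8-bit images)."""
    err = mse(img_a, img_b)
    if err == 0:
        return float("inf")  # identical images: no noise at all
    return 10.0 * math.log10(max_value ** 2 / err)


truth = [[0, 255], [255, 0]]
pred = [[10, 245], [255, 0]]
print(mse(truth, pred), rmse(truth, pred), mae(truth, pred), psnr(truth, pred))
```

Because PSNR is a monotone function of MSE for a fixed peak value, the two always rank a set of predictions in the same (reversed) order.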

Because PSNR is derived from MSE, it still relies on pixel-by-pixel comparison. Another technique for quantifying image similarity is the structural similarity index measure (SSIM), which is designed to be consistent with perception by the human visual system (HVS). Rather than summing errors in the conventional way, SSIM models image distortion as a combination of three factors: loss of correlation, luminance distortion, and contrast distortion [159].
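A simplified SSIM can be sketched as follows. The standard index is computed over local sliding windows (for example an 11 by 11 Gaussian window) and then averaged into a mean SSIM; this sketch instead treats the whole image as a single window, which is a simplifying assumption. It uses the usual combined form $\mathrm{SSIM} = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$ with $C_1 = (0.01L)^2$ and $C_2 = (0.03L)^2$, where $L$ is the dynamic range of the pixel values.

```python
def ssim_global(img_a, img_b, data_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equal-sized grayscale images (lists of rows)."""
    x = [float(v) for row in img_a for v in row]
    y = [float(v) for row in img_b for v in row]
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    # Sample variances and covariance (n - 1 denominator)
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)
    # Stabilizing constants that keep the ratio defined for flat images
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    luminance_term = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast_structure_term = (2 * cov_xy + c2) / (var_x + var_y + c2)
    return luminance_term * contrast_structure_term


reference = [[0, 255], [255, 0]]
noisy = [[10, 245], [255, 0]]
inverted = [[255 - v for v in row] for row in reference]
print(ssim_global(reference, reference), ssim_global(reference, noisy), ssim_global(reference, inverted))
```

The inverted image has the same mean as the reference but an anticorrelated structure, so its SSIM falls below zero, while the slightly noisy image stays close to 1.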

Internal activations of convolutional neural networks have recently been shown to be an effective measure of perceptual similarity that accurately predicts human judgments of relative image similarity. The Learned Perceptual Image Patch Similarity (LPIPS) metric uses this property: in essence, it measures how similar the activations of two image patches are in a given network. This measure has been shown to closely reflect human perception, and image patches with a low LPIPS score are perceptually similar [158].
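For reference, the LPIPS distance between two patches $x$ and $x_0$ can be written as follows, based on our reading of the original LPIPS formulation. Here $\hat{y}^l_{hw}$ and $\hat{y}^l_{0hw}$ are the activations of layer $l$ at spatial position $(h, w)$, normalized to unit length along the channel dimension, $H_l \times W_l$ is the spatial size of that layer, and $w_l$ is a learned per-channel weight vector.

```latex
d(x, x_0) = \sum_{l} \frac{1}{H_l W_l} \sum_{h, w}
  \left\lVert w_l \odot \left(\hat{y}^{l}_{hw} - \hat{y}^{l}_{0hw}\right) \right\rVert_2^2
```

In practice the activations come from a pretrained backbone such as AlexNet or VGG, so unlike MSE or SSIM the score depends on the chosen network.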

In materials science, microstructures are also assessed with statistical descriptors such as the two-point correlation function and the chord length distribution. In this dissertation, in addition to index values such as MSE, PSNR, SSIM, and LPIPS, the two-point correlation function [160] and the chord length distribution [161] are used to compare the statistical distributions of two images. For the two-point correlation, the microstructure images can be digitized using the local state and the local state space [162]. The local state (h) comprises the attributes needed to completely identify all relevant material properties at the selected length scale and can be defined as follows:

$$h = (\rho, c_i) \qquad (15)$$

where $\rho$ is a phase identifier ($\alpha, \beta, \gamma, \dots$) and $c_i$ represents the chemical composition. The complete set of all theoretically possible local states in a selected material system is the local state space (H) [163]:

$$H = \{(\rho, c_i) \mid \rho \in \{\alpha, \beta, \gamma, \dots\},\ c_i \in C_{f_i}\} \qquad (16)$$

Representing the microstructure as a function h(x, t) specifies the local state present at every spatial position x and time t. In practice, however, all microstructure characterization techniques probe the local state over a finite volume and a finite time interval, and the resolution limits and uncertainty inherent to these techniques make it impractical to determine this function exactly. The local state can only be characterized as an average over a finite probe volume and a finite time step, so the local state in a particular pixel or voxel at a particular time step may not be unique [164]. To address these issues, the microstructure function m(h, x) is defined as the probability density associated with finding local state h at spatial location x (at time t). The quantity m(h, x) dh dx is the probability of finding a local state within a small interval dh centered on h in a small neighborhood dx of the selected x, and m(h, x) is the corresponding probability density [165]. In practice, m(h, x) is usually evaluated from discrete values.
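The probability-density view can be illustrated with a discrete microstructure function obtained by coarse-graining. This is a minimal sketch, assuming a hypothetical input format: a labeled 2D image in which every fine pixel holds an integer local-state index, and a probe cell of `cell` by `cell` fine pixels. The returned `m[h][U][V]` is the fraction of coarse cell (U, V) occupied by local state h, so mixed cells show directly why the local state of a coarse pixel need not be unique.

```python
def microstructure_function(labels, num_states, cell):
    """Discrete m[h][U][V]: fraction of coarse cell (U, V) occupied by local state h."""
    rows, cols = len(labels), len(labels[0])
    if rows % cell or cols % cell:
        raise ValueError("image size must be a multiple of the cell size")
    nu, nv = rows // cell, cols // cell
    m = [[[0.0] * nv for _ in range(nu)] for _ in range(num_states)]
    weight = 1.0 / (cell * cell)  # each fine pixel is this fraction of its coarse cell
    for u in range(rows):
        for v in range(cols):
            m[labels[u][v]][u // cell][v // cell] += weight
    return m


# Hypothetical 4x4 two-phase image (states 0 and 1), coarse-grained into 2x2 cells
fine = [
    [0, 0, 1, 1],
    [0, 1, 1, 1],
    [0, 0, 0, 1],
    [0, 0, 1, 1],
]
m_coarse = microstructure_function(fine, num_states=2, cell=2)
print(m_coarse)
```

For every coarse cell the fractions sum to 1 over all local states, which is the discrete counterpart of m(h, x) integrating to 1 over the local state space.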

On a square lattice, a microstructure is represented by pixels in two-dimensional (2D) images and by voxels in three-dimensional (3D) images. Such images are stored as arrays in which each element holds a value based on the brightness of the corresponding pixel or voxel. The sampling grid must be fine enough to capture the relevant features of the material's internal structure. The different phases in the microstructure can be represented by distinct values. For example, Figure 2.6a shows a real two-phase microstructure that can be depicted by a binary (black and white) image.

Figure 2.6 (a) A real two-phase microstructure; (b) and (c) a simple checkerboard microstructure illustrating $X_{uv}$ and the two-point correlation (white is phase 1 and black is phase 2).

If X denotes the microstructure on a square lattice, it can be expressed mathematically as follows:

$$X_{uv} = \begin{cases} 1, & \text{if } uv \in \text{phase 1} \\ 0, & \text{otherwise} \end{cases} \qquad (17)$$

where uv is the pixel index denoting the pixel location in the microstructure image. The two-point correlation function, a simple n-point correlation method, evaluates the correlation between two random points in the microstructure separated by a vector r, with $X^n_{uv}$ denoting the indicator of Eq. (17) written for local state n:

$$f^{np}_{r,uv} = \langle X^n_{uv}\, X^p_{uv+r} \rangle \qquad (18)$$

where $\langle \cdot \rangle$ is the expectation operator. A simple example of $X_{uv}$ and the two-point correlation is presented in Figures 2.6b and 2.6c. Since we are dealing with discrete values, the expectation can be defined as:

(19)

$f^{np}_{r,uv}$ is the probability of finding local state n at bin uv and local state p at bin uv + r. This definition can be extended to three-, four-, or, in general, n-point correlation functions. For a periodic microstructure, $f^{np}_{r,uv}$ is independent of uv. For a two-phase material, there are four such functions, $f^{11}_r$, $f^{12}_r$, $f^{21}_r$, and $f^{22}_r$:

$$f^{np}_r = \left[f^{11}_r,\ f^{12}_r,\ f^{21}_r,\ f^{22}_r\right] \qquad (20)$$
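For a single image, the expectation in Eq. (18) can be estimated by averaging $X^n_{uv} X^p_{uv+r}$ over all pixel positions uv, which assumes the microstructure is periodic so that the result does not depend on uv. The sketch below does this by direct summation with periodic wrap-around; phase labels 1 and 2 follow Figure 2.6, and the function names are illustrative. Practical codes usually compute the same quantity for all r at once with FFTs, which is much faster on large images.

```python
def two_point(labels, n, p, r):
    """Periodic estimate of f_r^{np}: fraction of sites uv with state n at uv and p at uv + r."""
    rows, cols = len(labels), len(labels[0])
    du, dv = r
    hits = 0
    for u in range(rows):
        for v in range(cols):
            # Wrap uv + r around the image edges (periodic boundary assumption)
            if labels[u][v] == n and labels[(u + du) % rows][(v + dv) % cols] == p:
                hits += 1
    return hits / (rows * cols)


def two_point_vector(labels, r, phases=(1, 2)):
    """Return [f_r^11, f_r^12, f_r^21, f_r^22] for a two-phase image, as in Eq. (20)."""
    return [two_point(labels, n, p, r) for n in phases for p in phases]


# A 4x4 checkerboard in which phase 1 and phase 2 alternate
checker = [[1 if (u + v) % 2 == 0 else 2 for v in range(4)] for u in range(4)]
print(two_point_vector(checker, (0, 0)))
print(two_point_vector(checker, (0, 1)))
```

At r = 0 the autocorrelation $f^{11}_0$ equals the volume fraction of phase 1 (0.5 for the checkerboard), and a one-pixel horizontal shift moves all of the weight to the cross-correlations $f^{12}_r$ and $f^{21}_r$.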

The lineal-path function is another statistical function useful for microstructure characterization. It quantifies the clustering of a phase along straight lines: from a probabilistic point of view, it is the probability that a line segment of a given length drawn on the microstructure lies entirely within one phase [166, 167]. It can be calculated by different methods, such as from the chord distribution [166] or by Monte Carlo simulation [168]. The lineal-path function carries information that is linearly independent of the two-point correlation function, which is more effective for phase recognition. The chord-length distribution (CLD), which is proportional to the second derivative of the lineal-path function, is also used for microstructure quantification [169]. The lineal-path function cannot accurately represent phase connectivity because it considers only straight-line connections, and these are measured only in specific directions. Several studies have applied different methods to evaluate it along multiple directions [170-172]. Despite these weaknesses, the lineal-path function has been applied to microstructure characterization in several studies [166, 173]. Popova et al. [174] used the CLD, which can be derived from the lineal-path function, to quantify the material structure in additive manufacturing. Some researchers have reported that the lineal-path function and the two-point cluster correlation function are useful for finding clusters in microstructures [166, 173, 175].
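Both descriptors can be sketched along a single direction (the rows of the image), assuming a labeled 2D image and measuring lengths in pixels. The lineal-path estimate here counts every possible horizontal segment exhaustively rather than sampling by Monte Carlo, the image is treated as non-periodic, and chords cut off by the image edge are kept; these are simplifying assumptions, and published implementations differ in such choices and often sample several directions.

```python
from collections import Counter


def lineal_path(labels, phase, length):
    """Fraction of horizontal segments of `length` pixels lying entirely in `phase` (non-periodic)."""
    cols = len(labels[0])
    if not 1 <= length <= cols:
        raise ValueError("length must be between 1 and the image width")
    inside = total = 0
    for row in labels:
        for start in range(cols - length + 1):
            total += 1
            if all(pix == phase for pix in row[start:start + length]):
                inside += 1
    return inside / total


def chord_lengths(labels, phase):
    """Counter of horizontal chord lengths: maximal runs of consecutive `phase` pixels per row."""
    chords = Counter()
    for row in labels:
        run = 0
        for pix in row:
            if pix == phase:
                run += 1
            else:
                if run:
                    chords[run] += 1
                run = 0
        if run:
            chords[run] += 1  # chord truncated by the image edge is kept (a simplifying choice)
    return chords


image = [
    [1, 1, 1, 0, 0, 1],
    [0, 1, 1, 0, 1, 1],
    [1, 0, 0, 0, 1, 1],
]
print([lineal_path(image, 1, z) for z in (1, 2, 3)])
print(chord_lengths(image, 1))
```

With these conventions the two descriptors are linked directly: the number of length-z segments inside phase 1 equals the sum of max(0, l - z + 1) over all chord lengths l, which is the discrete counterpart of recovering the CLD from derivatives of the lineal-path function.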

CHAPTER THREE: DEEP LEARNING APPROACH FOR CHEMISTRY AND PROCESSING HISTORY PREDICTION FROM MATERIALS MICROSTRUCTURE